We are joined today by James Maitland, a leading expert in the application of robotics and IoT in medicine, whose work is at the forefront of the technological revolution in healthcare. He brings a unique perspective on how the convergence of cloud computing, artificial intelligence, and data is not just an upgrade, but a fundamental reshaping of the industry. In our conversation, we will explore the tangible return on investment that AI solutions are delivering, the critical challenge of building trust with clinicians, how deep learning is radically altering the economics of drug discovery, and the ways these intelligent systems are empowering patients to take a more active role in their own care.

You mentioned that solutions built on platforms like AWS and GCP deliver a clear ROI. Can you walk us through a specific example, perhaps an LLM automating administrative workflows, and explain how an organization measures the tangible financial benefits and improved health outcomes?

Absolutely. Imagine a bustling primary care clinic where clinicians spend nearly half their day on administrative tasks. We can deploy a multi-agent system built on a large language model that integrates directly into their workflow. During a patient visit, the system processes the audio from the conversation, interprets the dialogue using natural language processing, and automatically drafts the clinical notes in the Electronic Health Record. It can simultaneously summarize the patient’s complex history and even pre-populate insurance forms. The ROI here is incredibly direct. We measure the reduction in time clinicians spend on documentation, which often frees up several hours a week per doctor. This translates into either more patients seen per day or, more importantly, more quality time spent with each patient. The financial benefit is clear from the increased capacity, but the health outcome is the real prize: a less burned-out clinician who is fully present, leading to better diagnoses and stronger patient relationships.
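To make the measurement concrete, here is a minimal back-of-the-envelope sketch of the calculation described above. Every input (documentation minutes before and after rollout, visit length, revenue per visit, weekly tool cost) is a hypothetical placeholder that an organization would replace with its own measured data. Treating all freed time as new visits gives an upper bound on capacity, not a forecast.

```python
from dataclasses import dataclass


@dataclass
class ClinicInputs:
    """Hypothetical inputs an organization would measure before and after rollout."""
    clinicians: int
    doc_minutes_before: float  # documentation minutes per clinician per day, pre-rollout
    doc_minutes_after: float   # documentation minutes per clinician per day, post-rollout
    clinic_days_per_week: int
    minutes_per_visit: float   # average appointment length
    revenue_per_visit: float   # hypothetical average reimbursement per visit
    weekly_tool_cost: float    # hypothetical licensing plus cloud cost per week


def weekly_minutes_saved(x: ClinicInputs) -> float:
    """Documentation minutes saved across the whole clinic in one week."""
    per_day = max(x.doc_minutes_before - x.doc_minutes_after, 0.0)
    return per_day * x.clinic_days_per_week * x.clinicians


def extra_visit_capacity(x: ClinicInputs) -> int:
    """Upper bound on extra visits per week if all freed time went to new patients."""
    return int(weekly_minutes_saved(x) // x.minutes_per_visit)


def weekly_roi(x: ClinicInputs) -> float:
    """Simple ROI: (value of added capacity - tool cost) / tool cost."""
    value = extra_visit_capacity(x) * x.revenue_per_visit
    return (value - x.weekly_tool_cost) / x.weekly_tool_cost


if __name__ == "__main__":
    clinic = ClinicInputs(clinicians=4, doc_minutes_before=120, doc_minutes_after=60,
                          clinic_days_per_week=5, minutes_per_visit=20,
                          revenue_per_visit=100.0, weekly_tool_cost=1000.0)
    print(weekly_minutes_saved(clinic) / 60)  # clinic-wide hours freed per week
    print(extra_visit_capacity(clinic))       # upper bound on added visits per week
    print(weekly_roi(clinic))
```

With these made-up inputs each clinician regains five hours a week. A clinic that reinvests that time in longer visits rather than more visits would report the same hours freed but little or no added visit revenue, so the time saved and the financial figure are worth tracking separately.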

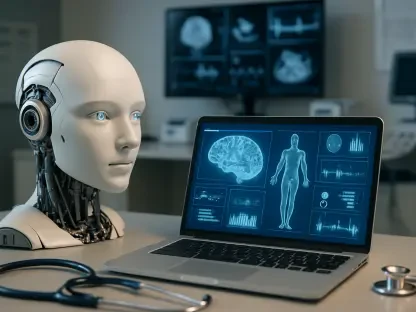

The article highlights a “trust barrier” with clinicians. Beyond just providing a rationale, what specific steps in model governance and AI explainability are most effective in getting practitioners to pivot from individual experience to these new evidence-driven tools? Please share a success story.

This is the central challenge. Doctors are trained to rely on their expertise, and a “black box” recommendation feels like an affront to that. The key is to transform the AI from an opaque oracle into a transparent consultant. For instance, in an oncology department, we introduced a diagnostic tool that analyzes scans for potential malignancies. When it flagged a subtle anomaly, it didn’t just output a probability score. It visually highlighted the specific cluster of cells, showed three other anonymized cases with similar patterns that were confirmed malignancies, and referenced the specific statistical features in the image that led to its conclusion. This level of explainability, backed by rigorous model governance that tracks data lineage and performance, was the game-changer. I watched a senior radiologist, initially very skeptical, start using it to double-check his own reads on complex cases. He said it was like having a junior resident who had studied a million scans, tirelessly pointing out things that deserved a second look. That’s how you build trust—not by replacing judgment, but by augmenting it with clear, verifiable evidence.
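The interview does not say how the tool chose its three comparison cases. One common approach is nearest-neighbor retrieval: represent each scan as a feature vector and return the confirmed cases closest to the new one. The sketch below assumes such vectors already exist (for example, taken from the imaging model). The `ReferenceCase` type, the toy three-dimensional vectors, and cosine similarity as the distance measure are all illustrative choices, not the tool's actual design.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class ReferenceCase:
    case_id: str             # anonymized identifier shown to the clinician
    embedding: List[float]   # hypothetical feature vector for the scan
    confirmed_malignant: bool


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similar_confirmed_cases(
    query: Sequence[float], library: Sequence[ReferenceCase], k: int = 3
) -> List[Tuple[str, float]]:
    """Return the k confirmed-malignant cases most similar to the query scan."""
    confirmed = [c for c in library if c.confirmed_malignant]
    ranked = sorted(
        confirmed, key=lambda c: cosine_similarity(query, c.embedding), reverse=True
    )
    return [(c.case_id, cosine_similarity(query, c.embedding)) for c in ranked[:k]]


if __name__ == "__main__":
    library = [
        ReferenceCase("A", [0.9, 0.1, 0.0], True),
        ReferenceCase("B", [1.0, 0.0, 0.0], False),  # closest vector, but not malignant
        ReferenceCase("C", [0.7, 0.7, 0.0], True),
        ReferenceCase("D", [0.0, 1.0, 0.0], True),
        ReferenceCase("E", [0.8, 0.0, 0.2], True),
    ]
    for case_id, score in similar_confirmed_cases([1.0, 0.0, 0.0], library):
        print(case_id, round(score, 3))
```

Case B is the nearest vector overall but is filtered out because it is not a confirmed malignancy, so the clinician only sees verified precedents.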

AlphaFold’s impact on drug discovery was a major breakthrough. Moving beyond protein folding, how are other deep learning algorithms, like transformers or CNNs, fundamentally altering the economics of innovation in other areas of research and development? What are the key metrics for success?

AlphaFold was a monumental proof of concept, and that same energy is now rippling through the entire R&D pipeline. Transformers, for example, are not just for language; they are exceptional at understanding sequences, whether it’s a sentence or a chemical compound. Researchers are now using them to digitally screen millions of potential drug molecules against a specific disease target, predicting their efficacy and potential side effects before a single one is synthesized in a lab. This completely flips the economics of innovation on its head. Instead of the traditional, wildly expensive, and often futile process of trial-and-error in a wet lab, you can focus your resources on a handful of highly promising candidates. The key metrics for success have shifted. We’re now measuring the reduction in the “lab-to-market” cycle time, the increase in the success rate of candidates entering clinical trials, and the significant decrease in upfront R&D costs. It’s about failing faster, cheaper, and more intelligently in the digital realm to succeed more quickly in the real world.
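The sequence framing and the screening funnel can be sketched as follows. A transformer reads a molecule as a token sequence, typically a SMILES string; the tokenizer below uses a regex in the style common in SMILES-transformer work (adapted here, so treat the exact pattern as an assumption). The trained predictor itself is out of scope: `predicted_efficacy` and `predicted_toxicity` are invented scores, and the toxicity cutoff and shortlist size are arbitrary. Ethanol, aspirin, benzene, and caffeine appear only as valid SMILES inputs.

```python
import re
from dataclasses import dataclass
from typing import List, Sequence

# SMILES tokenizer pattern (adapted; bracket atoms, two-letter halogens, bonds, ring digits).
SMILES_TOKEN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a SMILES string into the token sequence a transformer would consume."""
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"unrecognized characters in {smiles!r}")
    return tokens


@dataclass
class Candidate:
    smiles: str
    predicted_efficacy: float  # made-up model score; higher is better
    predicted_toxicity: float  # made-up model score; lower is better


def shortlist(
    candidates: Sequence[Candidate], max_toxicity: float = 0.3, top_k: int = 2
) -> List[Candidate]:
    """Drop likely-toxic molecules, then keep the top_k by predicted efficacy."""
    safe = [c for c in candidates if c.predicted_toxicity <= max_toxicity]
    return sorted(safe, key=lambda c: c.predicted_efficacy, reverse=True)[:top_k]


if __name__ == "__main__":
    library = [
        Candidate("CCO", 0.20, 0.05),
        Candidate("CC(=O)Oc1ccccc1C(=O)O", 0.75, 0.10),
        Candidate("c1ccccc1", 0.90, 0.80),
        Candidate("CN1C=NC2=C1C(=O)N(C(=O)N2C)C", 0.60, 0.20),
    ]
    print(tokenize_smiles(library[0].smiles))
    picks = shortlist(library)
    print([c.smiles for c in picks])
    print(f"{len(picks)} of {len(library)} screened molecules go to the wet lab")
```

The highest-scoring molecule on efficacy is discarded for predicted toxicity before any synthesis, which is the "fail cheaply in the digital realm" idea in miniature.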

You described algorithms as a “second set of eyes” for radiologists in high-pressure settings like stroke units. From a practical standpoint, how are these tools integrated into the workflow to prioritize critical cases without disrupting the established clinical process?

In an emergency setting like a stroke unit, you can’t afford any friction; the integration has to be seamless and almost invisible. Here’s how it works: when a patient comes in for a CT scan, the image is automatically fed into the AI system the moment it’s generated. The algorithm, typically a Convolutional Neural Network, analyzes the scan in seconds. If it detects evidence of a time-sensitive condition like an acute ischemic stroke, it doesn’t just send a generic alert. It automatically pushes that patient’s scan to the very top of the radiologist’s reading queue, flagging it with a bright, unmissable priority marker. The radiologist doesn’t have to change their software or their process; their worklist is simply re-sorted in real time based on clinical urgency. The algorithm isn’t making the final call, but it’s acting as a hyper-efficient triage nurse, ensuring that the most critical cases get expert human attention without a moment’s delay.
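The real-time re-sorting can be modeled as a priority queue. In this minimal sketch, which assumes a simple two-level scheme, an AI-flagged study jumps ahead of routine ones while arrival order breaks ties within each level. The `ai_flagged_critical` argument stands in for the CNN's output, and the class and method names are hypothetical rather than any PACS or worklist vendor's API. Nothing is removed or auto-reported, so the radiologist still reads every study.

```python
import heapq
import itertools
from typing import List, Optional, Tuple


class ReadingWorklist:
    """Radiologist reading queue that AI triage can reorder but never removes from."""

    CRITICAL = 0  # hypothetical priority level for AI-flagged studies
    ROUTINE = 1

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker: first come, first served

    def add_study(self, study_id: str, ai_flagged_critical: bool) -> None:
        """Insert a study as soon as the scanner produces it."""
        priority = self.CRITICAL if ai_flagged_critical else self.ROUTINE
        heapq.heappush(self._heap, (priority, next(self._arrival), study_id))

    def next_study(self) -> Optional[str]:
        """Study the radiologist should read next (None if the queue is empty)."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def view(self) -> List[str]:
        """Worklist in display order, with flagged studies marked."""
        return [
            f"{sid} [PRIORITY]" if p == self.CRITICAL else sid
            for p, _, sid in sorted(self._heap)
        ]


if __name__ == "__main__":
    wl = ReadingWorklist()
    wl.add_study("CT-001", ai_flagged_critical=False)
    wl.add_study("CT-002", ai_flagged_critical=False)
    wl.add_study("CT-003", ai_flagged_critical=True)
    wl.add_study("CT-004", ai_flagged_critical=False)
    print(wl.view())
    print(wl.next_study())
```

Using arrival order as the secondary key keeps routine studies in their familiar sequence, so the only visible change for the radiologist is that flagged cases surface first.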

The text points out a shift from patients using Google to verified AI health assistants. How do these new platforms technically ensure their information is guideline-based and personalized? Can you describe the step-by-step process for developing one for a chronic condition like diabetes?

Creating a trusted AI health assistant is a meticulous process, far removed from the “wild west” of a web search. For a condition like diabetes, the first step is building a walled garden of information. We don’t let the model draw its answers from the open internet. Instead, we create a curated dataset composed exclusively of verified, guideline-based sources: peer-reviewed medical journals, publications from diabetes associations, and national health guidelines. The LLM is then fine-tuned on this specific corpus to learn how to translate complex medical jargon into simple, empathetic language. The personalization layer comes next. With patient consent, the app securely connects to their health data, such as a continuous glucose monitor or their EHR. Now, the assistant can offer context-aware support. It might say, “I see your blood sugar spiked after breakfast. Let’s review some meal options that might help stabilize it.” It’s a journey from a generic information source to a personalized, evidence-driven partner in care.
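The interview describes adapting the LLM itself to the curated corpus. The sketch below illustrates a related, swapped-in technique, retrieval-grounded prompting: an allow-list decides which sources enter the walled garden, the passages most relevant to the question are retrieved, and the patient's consented CGM readings are added for personalization. The source categories, the naive word-overlap ranking, the placeholder passages, and the prompt wording are all assumptions for illustration; the resulting prompt would be sent to whatever LLM the system deploys.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Sequence, Set

# Hypothetical provenance categories admitted to the "walled garden".
ALLOWED_SOURCE_TYPES = {"peer_reviewed_journal", "diabetes_association", "national_guideline"}


@dataclass
class Passage:
    citation: str     # label cited back to the patient
    source_type: str  # provenance tag assigned during curation
    text: str


def build_corpus(passages: Sequence[Passage]) -> List[Passage]:
    """Step 1: admit only passages whose provenance is on the allow-list."""
    return [p for p in passages if p.source_type in ALLOWED_SOURCE_TYPES]


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, corpus: Sequence[Passage], k: int = 2) -> List[Passage]:
    """Step 2: rank curated passages by naive word overlap with the question."""
    q = _words(question)
    scored = [(len(q & _words(p.text)), p) for p in corpus]
    relevant = [(s, p) for s, p in scored if s > 0]
    relevant.sort(key=lambda sp: sp[0], reverse=True)
    return [p for _, p in relevant[:k]]


def build_prompt(
    question: str,
    corpus: Sequence[Passage],
    recent_glucose_mg_dl: Optional[Sequence[int]] = None,
) -> str:
    """Step 3: combine curated evidence with consented patient data into one prompt."""
    lines = ["Answer in plain, empathetic language. Use ONLY the sources below and cite them."]
    lines += [f"[{p.citation}] {p.text}" for p in retrieve(question, corpus)]
    if recent_glucose_mg_dl:
        readings = ", ".join(str(r) for r in recent_glucose_mg_dl)
        lines.append(f"Patient's recent CGM readings (mg/dL): {readings}")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


if __name__ == "__main__":
    passages = [
        Passage("Guideline A", "national_guideline", "Placeholder text about breakfast composition and glucose."),
        Passage("Forum post", "web_forum", "Placeholder text about breakfast and glucose."),
        Passage("Guideline B", "diabetes_association", "Placeholder text about physical activity."),
    ]
    corpus = build_corpus(passages)  # the forum post is rejected here
    print(build_prompt("What breakfast keeps my glucose steady?", corpus, [110, 185]))
```

The forum post matches the question just as well as the guideline does, but it never reaches the prompt because provenance is checked before relevance.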

Regulations like HIPAA and GDPR pose significant data-sharing challenges. From an engineering perspective, what are the most promising techniques for training robust global models while respecting these strict privacy boundaries, and what are the main trade-offs you have to consider?

This is the tightrope we walk every day. The most promising technique by far is federated learning. The old model was to bring all the data to a central server for training, which is often a non-starter under GDPR or HIPAA. In federated learning, we flip that: we bring the model to the data. A hospital in Europe can use its own private patient data to train a version of the model locally, behind its firewall. Raw patient records never leave the institution. Only the model’s learned parameters, its weight updates, are sent back to a central aggregator to be combined with the updates from other hospitals. This allows us to build a robust, global model that benefits from diverse datasets without centralizing patient records. The main trade-off is complexity and a potential slight dip in performance compared with having all the data in one place. It’s a far more difficult engineering challenge, but it’s the ethical and legal imperative for building scalable AI in healthcare.
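A minimal sketch of the loop described above, using Federated Averaging (FedAvg), a widely used aggregation rule; the interview does not name an aggregation method, so that choice is an assumption. Each hospital runs a few epochs of SGD on a toy linear model over its own (x, y) pairs, and only the resulting weights and an example count reach the aggregator, which averages them weighted by data size. The site names, data, learning rate, and round count are made up.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs that never leave a site


@dataclass
class SiteUpdate:
    site: str
    weights: List[float]  # [w, b] after local training: the only model state shared
    num_examples: int     # used to weight the average


def local_update(global_weights: Sequence[float], data: Data,
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Train y = w*x + b with plain SGD on one hospital's private data."""
    w, b = global_weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return [w, b]


def federated_average(updates: Sequence[SiteUpdate]) -> List[float]:
    """FedAvg: average site weights, weighted by each site's example count."""
    total = sum(u.num_examples for u in updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(updates[0].weights)
    return [sum(u.weights[i] * u.num_examples for u in updates) / total for i in range(dim)]


def mse(weights: Sequence[float], data: Data) -> float:
    w, b = weights
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)


def run_rounds(sites: Dict[str, Data], rounds: int = 20) -> List[float]:
    """Each round: broadcast global weights, train locally, aggregate."""
    global_w = [0.0, 0.0]
    for _ in range(rounds):
        updates = [SiteUpdate(name, local_update(global_w, data), len(data))
                   for name, data in sites.items()]
        global_w = federated_average(updates)
    return global_w


if __name__ == "__main__":
    sites = {
        "hospital_eu": [(0.0, 1.0), (1.0, 3.0)],
        "hospital_us": [(2.0, 5.0), (3.0, 7.0)],
    }
    print(run_rounds(sites))
```

Because every round starts from the shared global weights, the aggregated model learns from both sites while neither hospital's records are pooled. When sites' data differ, local models drift apart between rounds, which can slow convergence relative to training on pooled data; that is one concrete form of the complexity trade-off.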

What is your forecast for algorithmic healthcare?

My forecast is for a future of quiet integration. The initial hype of “AI replacing doctors” will fade, and instead, algorithms will become a deeply embedded, almost invisible, part of the healthcare infrastructure. Think of it as the central nervous system of a hospital—automating administrative tasks, acting as a tireless second set of eyes for diagnostics, and personalizing patient communication. The most profound shift will be from reactive to proactive care. These systems will get better at identifying at-risk patients and flagging subtle warning signs long before a condition becomes acute. Healthcare will become less about heroic interventions and more about continuous, quiet management. Full autonomy is still a distant goal, but this seamless collaboration between human expertise and algorithmic intelligence will become the new, and much higher, standard of care.