In the quiet hours of the night, long after clinics have closed and phone lines have gone unanswered, millions of people are turning to an unlikely source for medical guidance: artificial intelligence. This massive, unmonitored migration toward AI for healthcare advice represents one of the most significant shifts in modern patient behavior, with over 40 million individuals now using ChatGPT for health-related queries every day. Driven by deep-seated frustrations with a traditional healthcare system perceived as inaccessible and prohibitively expensive, this trend has evolved far beyond simple symptom checking. Users are now asking AI to interpret complex lab results, navigate byzantine insurance policies, and even help them prepare for appointments with human doctors. While some view this as a powerful form of patient empowerment, a digital ally in a confusing landscape, it has ignited a fierce debate among medical professionals and regulators about the profound safety implications of outsourcing health decisions to an unregulated algorithm, a practice that blurs the line between information and diagnosis in an unprecedented way.

The Digital Shift in Patient Care

A Response to Systemic Failures

The widespread adoption of AI as a medical resource is not merely a testament to technological advancement; it is a direct symptom of systemic cracks in the foundation of traditional healthcare, particularly within the United States. Escalating costs, prolonged wait times for appointments, and a growing erosion of public trust have created a vacuum that AI is rapidly filling. The data starkly illustrates this reality: an overwhelming seven out of every ten healthcare-related conversations on the platform take place outside of standard clinic hours, with usage surging overnight and on weekends when access to professional medical help is at its lowest. This pattern suggests that for many, AI is not a first choice but a last resort. It has become a de facto after-hours clinic and an immediate source of information for those who feel left behind by a system that is often slow to respond, difficult to navigate, and financially burdensome, forcing patients to seek alternative avenues for care and guidance.

This reliance on AI is even more pronounced in geographically isolated and underserved communities, transforming the technology from a convenience into a critical lifeline. In rural areas often described as “hospital deserts” (defined as locations more than a 30-minute drive from the nearest hospital), AI has become an essential tool for preliminary health information. States like Wyoming and Oregon, which have seen numerous rural hospital closures in recent years, are at the forefront of this trend, generating nearly 600,000 healthcare-related messages to the platform on a weekly basis. For residents in these regions, AI serves as a bridge across significant gaps in healthcare access, providing instant answers to questions that might otherwise require hours of travel or a long wait for a mobile clinic. As a result, the digital tool is no longer a supplementary resource but a primary point of contact for countless individuals navigating their health concerns in the absence of readily available professional care.

Understanding Patient Queries

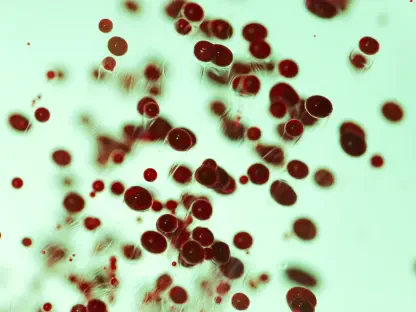

An in-depth analysis of user behavior reveals that individuals are leveraging AI for a broad and increasingly complex spectrum of health-related tasks. According to a survey commissioned by OpenAI, the most frequent uses include checking symptoms (55%), seeking to understand complex medical terminology (48%), and exploring potential treatment options (44%). However, the scope of these queries has expanded significantly beyond these basic functions. A growing number of users are now inputting data from their lab reports to ask for interpretations, requesting summaries of dense medical studies, or seeking advice on how to effectively communicate their symptoms to a physician. This evolution indicates deepening trust in, and reliance on, the platform’s capabilities to distill and simplify intricate health information. The AI is being treated less like a search engine and more like a preliminary consultant, a digital assistant capable of translating the often-intimidating language of medicine into understandable terms for the average person.

From the perspective of its developers, this explosive growth in health-related usage is framed as a positive step toward patient empowerment. An OpenAI report from January 2024, titled ‘AI as a Healthcare Ally,’ casts the technology as a vital tool for helping users navigate an increasingly convoluted and often inaccessible healthcare system. The report argues that by providing clear, on-demand information, AI can help demystify medicine, enabling patients to become more informed and active participants in their own care. This perspective positions the platform not as a replacement for doctors but as a supplementary resource that prepares patients for more productive conversations with their healthcare providers. It functions as an interpreter and an organizer, helping individuals make sense of their health journey before they even step into a clinic, thereby fostering a more collaborative and efficient relationship between patient and physician.

The Professional and Regulatory Landscape

Adoption within the Medical Community

The trend of consulting AI for medical information is not confined to patients; a significant and growing number of healthcare professionals are also integrating these tools into their daily workflows. The American Medical Association reported a sharp increase in AI usage among U.S. physicians, with 66% utilizing the technology in 2024, a substantial leap from 38% just a year prior. Clinicians are primarily turning to AI to combat the overwhelming administrative burden that characterizes modern medicine. They use it for tasks such as drafting and summarizing patient notes, generating referral letters, and communicating with insurance companies, thereby freeing up valuable time to focus on direct patient care. Furthermore, some are using it as a diagnostic support tool, inputting complex symptom patterns to generate a list of potential differential diagnoses, particularly in challenging or unusual cases where a second, data-driven perspective can be beneficial.

This professional adoption extends across the healthcare spectrum, with nearly half of all nurses now reporting weekly use of AI tools to manage their demanding responsibilities. In understaffed hospitals and clinics, where nurses are often stretched thin, AI has become an indispensable assistant for streamlining non-clinical tasks. These tools help in creating patient education materials, summarizing shift handovers, and organizing care schedules, which contributes to greater efficiency and reduces the risk of burnout. The integration of AI by both doctors and nurses highlights a broader industry acknowledgment of its potential to alleviate systemic pressures. By automating routine and time-consuming administrative work, these technologies allow skilled medical professionals to operate at the top of their licenses, dedicating more of their expertise and energy to the nuanced, human-centric aspects of patient treatment and care.

Navigating the Uncharted Regulatory Waters

Despite its growing utility for both patients and professionals, the rapid integration of AI into healthcare has created a significant and troubling gray area. The central point of contention revolves around the increasingly blurred distinction between providing generalized health information and offering what could be interpreted as an informal medical diagnosis. While AI platforms are programmed with disclaimers that they do not offer medical advice, the sophisticated and personalized nature of their responses can easily lead users to perceive them as diagnostic. This ambiguity raises profound questions about accountability and safety. If an AI provides misleading information that results in a delayed diagnosis or incorrect self-treatment, determining liability is a complex challenge. This lack of a clear regulatory framework leaves a vacuum where a powerful, influential technology operates without the rigorous oversight and safety standards applied to virtually every other aspect of the medical field.

The dialogue surrounding AI in healthcare has fundamentally shifted. The question is no longer whether people will use these tools for medical guidance, as their mass adoption has already made that a certainty. Instead, the critical debate has become about establishing the necessary guardrails and regulatory frameworks to govern how far this reliance can safely extend. Policymakers, medical boards, and technology companies face the urgent task of defining the ethical and legal boundaries for AI’s role in medicine. This involves creating clear standards for data privacy, accuracy, and transparency in AI-generated responses, as well as establishing protocols for when and how these tools should direct users to human professionals. The challenge is to harness the undeniable potential of AI to democratize health information without compromising the safety and well-being of the millions who have already come to depend on it.