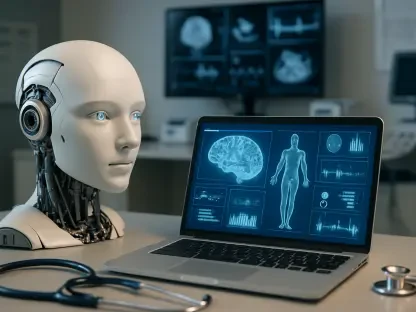

The healthcare industry stands at a pivotal moment: nearly 30% of clinical questions lack sufficient evidence to guide decisions, leaving physicians to act under uncertainty at critical moments, especially in specialized fields like geriatrics and oncology. This evidence gap is a significant obstacle to delivering personalized patient care. Enter agentic AI, a technology that promises to transform how real-world evidence (RWE) is generated and applied. This roundup gathers perspectives from industry leaders, technologists, and healthcare providers at Stanford Health Care on how agentic AI, led by tools like Evidence Agent from Atropos Health, is reshaping clinical decision-making. The goal is to compile insights, compare differing viewpoints, and highlight the technology’s potential to bridge long-standing gaps in medical research.

Unpacking the Promise of Agentic AI in Healthcare

Agentic AI represents a leap forward in healthcare technology because it autonomously generates tailored evidence from large datasets such as electronic health records (EHRs). At Stanford Health Care, this innovation is being integrated into daily practice, offering physicians real-time insights for patient-specific queries. Many in the industry view this as a major advance, especially for under-researched medical areas where traditional studies are scarce. The ability to produce actionable data on demand is seen as a vital step toward improving treatment outcomes in complex cases.

However, not all opinions align on the immediate benefits. Some healthcare professionals express caution, pointing out that while the technology holds immense potential, its novelty means long-term impacts remain untested. Concerns linger about whether AI-generated evidence can consistently match the depth and rigor of peer-reviewed studies. This divide in perspective sets the stage for a deeper exploration of how agentic AI is being received and implemented in real-world settings.

Revolutionizing Clinical Decisions with Personalized Evidence

Tailoring Insights for Niche Medical Fields

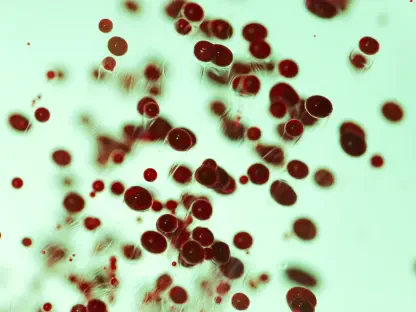

At the forefront of agentic AI’s impact is its capacity to address specific evidence gaps by pulling data directly from EHRs at institutions like Stanford Health Care. Industry innovators argue that this technology excels in delivering personalized RWE for specialties such as hematology, where conventional research often falls short. The real-time nature of these insights allows clinicians to make informed decisions swiftly, potentially improving patient outcomes in time-sensitive scenarios.

Skeptics, however, highlight the risk of data inaccuracies when evidence is generated at such a rapid pace. Some healthcare providers worry that without stringent validation processes, there could be instances where AI outputs lead to suboptimal treatment choices. This concern underscores a broader debate on balancing speed with reliability in clinical environments where stakes are exceptionally high.

A middle ground emerges from discussions among technologists who advocate for iterative improvements in AI algorithms. They suggest that ongoing feedback from clinical users can help refine the technology, ensuring that personalized evidence becomes both faster and more dependable over time. This collaborative approach is gaining traction as a way to mitigate initial shortcomings while maximizing benefits.

Enhancing Physician Efficiency through Workflow Integration

The seamless embedding of agentic AI tools into existing clinical systems at Stanford has garnered praise for reducing administrative burdens. Many physicians report that having evidence proactively documented within familiar platforms minimizes disruptions during patient interactions. This integration is often described as a significant boost to efficiency, allowing more focus on care rather than clerical tasks.

On the flip side, some professionals caution against over-reliance on automated systems. There’s a fear that excessive dependence might erode critical thinking skills among clinicians if AI recommendations are accepted without scrutiny. This perspective emphasizes the importance of maintaining a balance where technology supports, rather than supplants, human judgment.

Feedback from early adopters at Stanford suggests a practical solution lies in continuous training programs. By educating healthcare staff on the strengths and limitations of AI tools, institutions can foster a culture of informed usage. Such initiatives are seen as essential to ensuring that workflow enhancements translate into tangible improvements without compromising decision-making autonomy.

Scaling Evidence Generation to New Heights

Redefining the Pace of Medical Research

The scalability of agentic AI, with the ability to produce thousands of novel studies in mere minutes, has sparked excitement among research advocates. This rapid output is often positioned as a potential rival to traditional medical literature databases, offering a faster way to address unanswered clinical questions. Proponents argue that this could democratize access to evidence, especially for smaller institutions lacking extensive research resources.

Contrasting views come from academic circles that question the credibility of AI-generated studies compared to established research methods. Critics point out that speed should not come at the expense of methodological rigor, as the scientific community may hesitate to accept findings lacking traditional peer review. This tension highlights a key challenge in redefining standards for evidence in the AI era.

A balanced perspective suggests that hybrid models, combining AI efficiency with human oversight, could pave the way forward. Some industry voices propose that integrating agentic AI outputs into existing validation frameworks might help build trust over time. This approach is viewed as a stepping stone to reshaping how medical literature is created and consumed globally.

Building Trust with Transparency and Safeguards

Trust remains a cornerstone issue in the adoption of agentic AI, with many stakeholders commending the use of reliability indicators like color-coded badge systems to signal the strength of AI responses. Such transparency mechanisms are widely appreciated for helping clinicians assess whether evidence is robust enough for treatment decisions. This feature is often cited as a critical factor in fostering confidence among users.

Yet, concerns about data privacy persist, with some healthcare leaders stressing the need for airtight security measures. Even with assurances that data remains behind institutional firewalls, there’s apprehension about potential breaches in sensitive environments. These worries reflect a broader industry dialogue on safeguarding patient information amidst technological advancements.

A consensus is forming around the idea that trust can be bolstered through clear communication of AI processes and limitations. Technologists and providers alike advocate for detailed documentation of how evidence is generated, coupled with regular audits to ensure compliance with privacy standards. This proactive stance is seen as vital to encouraging wider acceptance in cautious medical settings.

Key Lessons from Stanford’s Experience with Agentic AI

Stanford Health Care’s journey with agentic AI offers valuable takeaways for other institutions eyeing similar technologies. A primary lesson is the transformative power of personalized RWE in filling evidence voids, particularly when integrated smoothly into clinical workflows. Many observers note that this dual focus on customization and usability has directly contributed to better decision-making capabilities among physicians.

Another critical insight is the necessity of prioritizing data security from the outset. Industry feedback underscores that robust protections are non-negotiable when handling sensitive patient information through AI systems. This principle is often highlighted as a prerequisite for scaling such innovations across diverse healthcare landscapes.

Practical strategies also emerge from this experience, with recommendations for starting with small-scale pilot programs to test AI tools in controlled settings. Partnerships with technology developers are frequently suggested as a way to tailor solutions to specific institutional needs. These actionable steps are viewed as accessible entry points for organizations aiming to harness the benefits of agentic AI without overwhelming existing systems.

Reflecting on the Path Forward

Taken together, the exploration of agentic AI’s role in clinical evidence at Stanford Health Care reveals a landscape rich with innovation and tempered by caution. The insights gathered from various industry perspectives paint a picture of considerable potential to improve healthcare decision-making through personalized, scalable evidence generation. Across these discussions, trust and integration stand out as pivotal to successful adoption.

Moving ahead, healthcare institutions are encouraged to take deliberate steps, such as initiating pilot projects to evaluate agentic AI’s impact in their unique contexts. Collaborating with technology pioneers to customize solutions offers a promising avenue for overcoming initial hurdles. Additionally, investing in clinician education emerges as a crucial measure to ensure that AI tools enhance, rather than overshadow, professional expertise. Staying engaged with evolving research and industry developments remains a vital consideration for navigating this dynamic field.