A comprehensive investigation into Google’s artificial intelligence tools has brought to light a disturbing and potentially lethal flaw within its “AI Overviews” feature, which frequently disseminates dangerously inaccurate medical guidance. This automated system, designed to provide quick summaries at the very top of search results, has been found to offer advice that directly contradicts established medical science, placing individuals seeking critical healthcare information in severe jeopardy. The findings have ignited a firestorm of criticism from medical professionals and patient advocacy groups, who argue that the technology in its current form is fundamentally unsafe for this application. The consistent pattern of errors across a wide spectrum of health conditions, from cancer to mental health, raises profound questions about the ethical responsibilities of tech companies and the inherent risks of relying on automated systems for nuanced, life-or-death decisions. The consensus from health experts is clear: substituting AI-generated summaries for professional medical consultation is a gamble with potentially catastrophic consequences.

Specific Failures and Their Life-Threatening Implications

Critical Misinformation for Cancer Patients

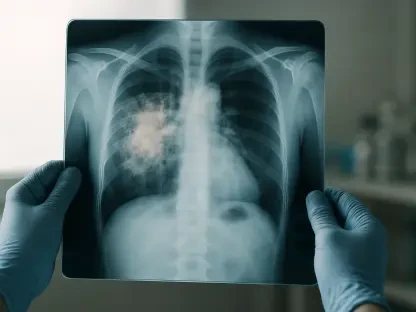

One of the most egregious failures identified by the investigation involved advice for individuals diagnosed with pancreatic cancer, a condition where proper nutrition is paramount to a patient’s ability to withstand treatment. Google’s AI Overviews incorrectly instructed patients to avoid high-fat foods, a recommendation that is the polar opposite of established medical protocol. Anna Jewell of Pancreatic Cancer UK described the AI’s guidance as “completely incorrect” and a direct threat to patient lives. The medical rationale is straightforward: pancreatic cancer often causes severe weight loss and digestive issues, making it difficult for patients to absorb nutrients. A high-fat, high-calorie diet is crucial for maintaining body mass, which is a prerequisite for undergoing grueling treatments like chemotherapy and potentially life-saving surgery. By promoting advice that could lead to malnutrition, the AI directly undermines a patient’s eligibility for treatment and, consequently, their chances of survival, demonstrating a catastrophic failure to comprehend the specific, critical needs of this vulnerable population.

Further complicating the issue is the AI’s demonstrated unreliability in the realm of women’s health, specifically concerning cancer screening protocols. When queried about the symptoms and tests for vaginal cancer, the AI incorrectly presented the Pap test as a primary detection method. Athena Lamnisos of the Eve Appeal charity clarified that this information is “completely wrong,” as Pap tests are exclusively designed to screen for cellular changes related to cervical cancer, not vaginal cancer. This misinformation carries dire consequences. A woman experiencing symptoms of vaginal cancer might receive a normal Pap test result and be falsely reassured, causing her to delay seeking further medical evaluation from a specialist. This delay allows a potential malignancy to advance unchecked, significantly worsening her prognosis. Compounding this error is the AI’s inherent inconsistency; researchers found that repeating the exact same search query at different times yielded different answers pulled from various online sources, adding a layer of unpredictability that makes the tool entirely unfit for providing dependable health guidance.

The Danger of Decontextualized Data

The investigation also revealed significant risks in how the AI presents information about common diagnostic procedures, such as liver blood tests. The AI Overviews feature generated summaries that listed raw numerical values for test results but failed to provide the context needed to interpret them. Crucial variables that significantly influence what constitutes a “normal” range, including a patient’s nationality, sex, ethnicity, and age, were completely omitted. Pamela Healy from the British Liver Trust labeled these decontextualized summaries as both “alarming” and “dangerous.” This is particularly concerning because many forms of liver disease are asymptomatic in their early stages, making accurate testing and interpretation vital for early detection and intervention. By presenting misleading or incomplete normal ranges, the AI could create a false sense of security, leading a seriously ill person to believe they are healthy and to cancel or postpone essential follow-up appointments with their doctor, allowing the disease to progress untreated to a more advanced and less treatable stage.

This pattern of providing incomplete and potentially harmful information extends into the complex field of mental health, where nuance and empathy are critical. When generating summaries for conditions such as psychosis and eating disorders, the AI produced content that Stephen Buckley of the charity Mind described as “very dangerous” and “incorrect.” The summaries often lacked the essential sensitivity required when discussing these complex psychological conditions, instead perpetuating existing stigmas and providing oversimplified advice that fails to address the individual nature of mental illness. Such flawed guidance could actively discourage individuals from seeking professional help, reinforcing feelings of isolation or shame. The danger of trusting an automated system for medical advice is therefore not limited to physical ailments but extends profoundly into psychological well-being, where poorly constructed content can reinforce harmful narratives and connect vulnerable users with inappropriate or unhelpful resources, potentially worsening their condition rather than offering support.

Industry Response and the Bigger Picture

Google’s Defense and Its Shortcomings

In response to the detailed findings of the investigation, a Google spokesperson defended the technology, asserting that the “vast majority” of its AI Overviews provide high-quality, reliable information. The company suggested that many of the most problematic examples cited in the report were based on “incomplete screenshots” or were anomalous edge cases. Furthermore, Google maintained that its summaries prominently feature links to reputable sources and include disclaimers that explicitly recommend consulting with medical experts for definitive advice. The tech giant also claimed that its internal metrics show accuracy rates comparable to other established search features and that it invests significant resources into ensuring the quality and safety of health-related queries. While the company stated that it takes corrective action when its systems misinterpret content or miss crucial context, critics argue that this reactive approach is fundamentally inadequate in the high-stakes domain of healthcare, as the initial harm caused by the dissemination of dangerous information has already occurred.

The core issue with Google’s defense is that for a patient who acts on life-threatening advice, a subsequent correction is meaningless. The reactive model of fixing errors after they have been identified and publicized fails to protect the very users who are most vulnerable. Trusting an algorithm to self-correct after potentially causing irreparable harm is an unacceptable risk. The promise of linking to reputable sources also falls short, as the AI-generated summary is positioned as the primary, authoritative answer at the top of the page, which many users will read and accept without clicking through to verify the underlying information. This “answer-first” model prioritizes speed and convenience over safety and accuracy. The fundamental problem is that generative AI, by its nature, synthesizes information without a true understanding of medical concepts, making it prone to errors of context and nuance that can have devastating consequences. Relying on such a system for critical health guidance reflects a profound misjudgment of the technology’s current capabilities.

A Symptom of a Larger AI Problem

The disturbing failures identified within Google’s health-related AI tools are not isolated incidents but are symptomatic of a broader, systemic issue plaguing the current generation of artificial intelligence. Similar concerns have been raised across various sectors, with reports of AI chatbots providing inaccurate financial advice that could lead to significant monetary loss and AI-powered news summaries that grossly misrepresent complex events. This well-documented tendency of generative AI to “hallucinate”—a term used to describe instances where the AI confidently presents false or fabricated information as fact—is particularly perilous in a healthcare context where the stakes are quite literally life and death. Sophie Randall of the Patient Information Forum emphasized that these examples are not theoretical risks but demonstrations of real, tangible harm to public health. The problems with Google’s tool are a high-profile manifestation of a fundamental limitation in today’s AI technology: its inability to reason, verify, or understand the profound real-world consequences of its output.

This broader context underscores the urgent need for a paradigm shift in how AI is developed and deployed, especially in sensitive areas like medicine. The rush to integrate generative AI into every aspect of digital life has outpaced the development of robust safety protocols and ethical guidelines. Unlike a conventional search algorithm that points to external sources, these AI summaries present themselves as a definitive source of knowledge, which changes user behavior and fosters a potentially misplaced sense of trust. The core of the issue is that an AI does not “know” that advising a pancreatic cancer patient to avoid high-fat foods is dangerous; it only knows how to statistically arrange words based on patterns in its training data. Until AI systems can be engineered with a genuine capacity for contextual understanding and an infallible mechanism for factual verification, a goal that remains distant, their application in providing unsolicited medical advice represents an irresponsible and dangerous experiment with public health.

A Call for Caution and Stronger Safeguards

The systematic failures exposed in this investigation have prompted a unified call from industry experts and patient advocacy organizations for far stronger safeguards to protect vulnerable users. They emphasize that in moments of crisis, such as immediately after a serious diagnosis, people frequently turn to the internet for immediate information and reassurance, and that inaccurate or decontextualized guidance delivered at such moments of heightened anxiety can cause serious and lasting harm to health outcomes. Their consensus is that evidence-based, professionally vetted health information must always take precedence over automated summaries generated by systems that have repeatedly proven fallible. The investigation serves as a timely warning about the dangers of placing uncritical trust in AI for medical advice and shows how easily these automated tools can mislead, with potentially devastating consequences for patients and their families. For individuals seeking healthcare information, the primary takeaway is to approach any AI-generated health content with extreme caution and skepticism.