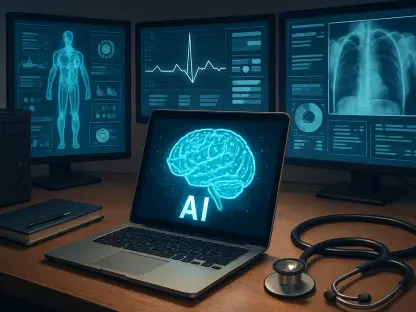

The advent of Large Language Models (LLMs) has transformed many sectors, including healthcare, where these models are now used for tasks such as diagnostic decision-making, patient management, and clinical reporting. Although LLMs perform impressively on controlled benchmarks such as the United States Medical Licensing Examination (USMLE), their effectiveness in real-world clinical settings has not been thoroughly examined. This article introduces MedHELM, a benchmarking framework designed to close this evaluation gap.

Current Use of LLMs in Medicine

LLMs are increasingly used across medical practice: they assist in diagnostic decision-making, streamline patient management, support clinical reporting, and aid medical research. The methods used to evaluate them, however, rarely reflect how they are actually used. Traditional evaluations rely on synthetic assessments, typically structured knowledge exams scored with a single metric, which fail to capture the dynamic and multifaceted nature of real clinical practice. In addition, publicly available datasets tend to be homogeneous, which limits the models’ ability to generalize across medical specialties and patient demographics.

A comprehensive evaluation of LLMs in real-world medical scenarios is therefore a prerequisite for integrating them into healthcare systems. Closing this gap requires a rigorous, realistic benchmarking framework that captures the complexity of live clinical environments and measures model performance accurately, so that AI can be integrated into healthcare reliably.

Introduction of MedHELM

To address these limitations, researchers developed MedHELM, a benchmarking framework for systematically evaluating LLMs on medical tasks. MedHELM builds on Stanford’s Holistic Evaluation of Language Models (HELM) and organizes its evaluation into five primary categories: Clinical Decision Support, Clinical Note Generation, Patient Communication and Education, Medical Research Assistance, and Administration and Workflow. Together, these categories cover the key healthcare applications of LLMs and give a more accurate picture of their capabilities in real-world clinical settings.

Within these five categories, MedHELM defines 22 subcategories and 121 specific medical tasks. This structure moves evaluation beyond testing theoretical knowledge toward measuring practical effectiveness in dynamic, complex medical environments, bridging the gap between synthetic evaluations and real-world applications with a multidimensional analysis of LLMs in healthcare.
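The category, subcategory, and task hierarchy lends itself to a nested roll-up of scores. The sketch below is a minimal illustration, assuming a two-level macro-average (tasks into subcategories, then subcategories into categories). The text does not say how MedHELM aggregates scores, and the function name `rollup`, the subcategory and task names, and the scores in the example are hypothetical placeholders, not MedHELM data. Only the five category names come from the framework.

```python
from collections import defaultdict
from statistics import mean

# The five top-level categories named in the text.
CATEGORIES = (
    "Clinical Decision Support",
    "Clinical Note Generation",
    "Patient Communication and Education",
    "Medical Research Assistance",
    "Administration and Workflow",
)

def rollup(records):
    """Macro-average task scores: tasks -> subcategories -> categories.

    Each record is (category, subcategory, task, score). This aggregation
    scheme is an assumption for illustration, not MedHELM's documented method.
    """
    by_subcategory = defaultdict(list)
    for category, subcategory, _task, score in records:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        by_subcategory[(category, subcategory)].append(score)

    # Average each subcategory first, then average subcategory means per category.
    by_category = defaultdict(list)
    for (category, _subcategory), scores in by_subcategory.items():
        by_category[category].append(mean(scores))

    return {category: mean(means) for category, means in by_category.items()}

# Hypothetical placeholder records (not real MedHELM tasks or results).
records = [
    ("Clinical Decision Support", "subcategory A", "task 1", 0.75),
    ("Clinical Decision Support", "subcategory A", "task 2", 0.25),
    ("Clinical Decision Support", "subcategory B", "task 3", 1.0),
]
print(rollup(records))  # {'Clinical Decision Support': 0.75}
```

Macro-averaging at each level keeps a subcategory with many tasks from dominating its category; a single average over all tasks would be the alternative if every task should count equally.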

Dataset Infrastructure and Evaluation Methodology

MedHELM’s evaluations draw on 31 datasets: 11 newly developed medical datasets and 20 pre-existing datasets based on clinical records. These datasets span a wide range of medical domains, so the assessments reflect real-world healthcare challenges rather than contrived scenarios. Combining newly developed and pre-existing data keeps the framework both current and broad in coverage.

The evaluation process has four components: context definition, prompting strategy, reference responses, and scoring metrics. The context definition specifies the data segment the model must analyze. The prompting strategy supplies predefined instructions that constrain model behavior. Reference responses are clinically validated outputs against which the model’s answers are compared. Scoring metrics include exact match and classification accuracy, as well as BLEU, ROUGE, and BERTScore for text-similarity evaluation. Together, these components are intended to make evaluations comprehensive and grounded in clinical accuracy.
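The four components can be pictured as fields of a single evaluation instance scored by a pluggable metric. The sketch below is a minimal illustration under stated assumptions: the `EvalInstance` class, the `evaluate` function, the way prompt and context are concatenated, and the toy `always_yes` model are all hypothetical and are not MedHELM’s or HELM’s actual API. It uses exact match, the simplest metric in the list above.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class EvalInstance:
    """Hypothetical evaluation record; field names are illustrative only."""
    context: str    # context definition: the data segment the model must analyze
    prompt: str     # prompting strategy: predefined instruction for the model
    reference: str  # clinically validated reference response

def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so formatting differences are ignored.
    return " ".join(text.lower().split())

def exact_match(prediction: str, reference: str) -> float:
    # Scoring metric: 1.0 if the normalized strings are identical, else 0.0.
    return 1.0 if normalize(prediction) == normalize(reference) else 0.0

def evaluate(instances: List[EvalInstance],
             model: Callable[[str], str],
             metric: Callable[[str, str], float]) -> float:
    """Query the model on each instance and return the mean metric score."""
    if not instances:
        return 0.0
    scores = []
    for inst in instances:
        # Combine the instruction with the context to form the model input
        # (this concatenation format is an assumption).
        model_input = f"{inst.prompt}\n\n{inst.context}"
        prediction = model(model_input)
        scores.append(metric(prediction, inst.reference))
    return sum(scores) / len(scores)

# Toy data and a toy "model" for illustration only.
instances = [
    EvalInstance(
        context="Note: patient reports chest pain radiating to the left arm.",
        prompt="Does the note report chest pain? Answer yes or no.",
        reference="yes",
    ),
    EvalInstance(
        context="Note: patient denies fever.",
        prompt="Does the note report a current fever? Answer yes or no.",
        reference="no",
    ),
]

def always_yes(_model_input: str) -> str:
    return "Yes"

print(evaluate(instances, always_yes, exact_match))  # 0.5
```

Any function with the same `(prediction, reference) -> float` shape could replace `exact_match`, which is how a text-similarity metric such as ROUGE would slot in; keeping the metric separate from the instance lets the same data be scored several ways.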

Assessment Outcomes

Six LLMs of varying sizes were assessed with MedHELM, revealing distinct strengths and weaknesses that depended on task complexity. Larger models such as GPT-4o and Gemini 1.5 Pro excelled at medical reasoning and computational tasks, achieving high accuracy in clinical risk estimation and bias identification; their strength on tasks requiring intricate reasoning and advanced computation suggests potential for complex medical applications. Mid-sized models such as Llama-3.3-70B-instruct performed well on predictive healthcare tasks like hospital readmission risk prediction, showing their utility for specific predictive tasks in clinical settings.

Smaller models such as Phi-3.5-mini-instruct and Qwen-2.5-7B-instruct, by contrast, struggled with domain-intensive knowledge tests, particularly in mental health counseling and complex medical diagnosis. These results show that capability varies with both model size and task complexity: smaller models handle intricate, specialized medical knowledge less reliably and need continued, targeted refinement. Choosing a model whose size and capability fit the specific medical application is therefore important for both efficiency and accuracy.

Challenges and Limitations

Although some models performed exceptionally well in certain areas, the evaluation also exposed notable challenges. Some models declined to answer medically sensitive questions or failed to respond in the required format, which lowered their scores. These failures show how difficult it is for models to handle nuanced medical queries reliably, a critical requirement for clinical use. In addition, conventional NLP metrics such as BERTScore-F1 often failed to capture clinical accuracy, producing only negligible score differences between models.
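A small example shows how a surface-similarity score can miss clinical accuracy. BERTScore compares contextual embeddings and needs a pretrained model, so the sketch below substitutes a simpler token-overlap F1 (the kind of unigram overlap that ROUGE-style metrics also reward). It illustrates the general failure mode only; it is not a reproduction of BERTScore-F1 or of MedHELM’s scoring, and the sentences are invented for the example.

```python
from collections import Counter

def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall (bag-of-words overlap)."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

reference = "chest x-ray shows no evidence of pneumonia"
correct_paraphrase = "no signs of pneumonia on chest x-ray"  # same clinical meaning
wrong_finding = "chest x-ray shows evidence of pneumonia"    # opposite clinical meaning

print(round(token_f1(correct_paraphrase, reference), 3))  # 0.714
print(round(token_f1(wrong_finding, reference), 3))       # 0.923
```

Dropping the single word "no" reverses the finding yet raises the score, which is the kind of gap that fact-based scoring and clinician review are meant to catch.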

These limitations point to the need for more stringent evaluation criteria, such as fact-based scoring and explicit clinician feedback, that better reflect clinical usability. Addressing them would let MedHELM refine its evaluation process, provide more reliable assessments, and ultimately support the development of more accurate and effective LLMs for healthcare.

Main Contributions of MedHELM

MedHELM addresses the gap between LLMs’ strong performance on controlled exams such as the USMLE and their largely unexamined effectiveness in real clinical settings. It extends Stanford’s HELM into a clinically grounded benchmark that organizes 121 medical tasks into 22 subcategories across five categories of healthcare use, and it evaluates models on 31 datasets, 11 of them newly developed. Each evaluation is structured around a defined context, a prompting strategy, clinically validated reference responses, and scoring metrics. Its assessment of six LLMs shows how performance varies with model size and task complexity, and it exposes the limits of conventional NLP metrics for judging clinical accuracy. Together, these contributions shift LLM evaluation from exam-style performance toward practical effectiveness in real clinical practice.