A recently surfaced patent filing reveals Apple’s ambitious exploration into integrating brain-monitoring technology directly into consumer earbuds, a development that could fundamentally alter the landscape of personal health and wearable computing. The patent, designated US20230225659A1, details a system for embedding electroencephalography (EEG) biosensors into a device akin to AirPods. This innovative approach aims to transform a ubiquitous audio accessory into a sophisticated neural monitoring tool capable of capturing brain activity non-invasively. The move signifies a major step toward a future where everyday technology becomes deeply integrated with human biology, offering unprecedented insights into our cognitive and physiological states while simultaneously raising complex questions about privacy and ethics in a hyper-connected world. This initiative is poised to revolutionize not only personal health tracking but also the very nature of human-computer interfaces.

The Foundation of In-Ear Neural Sensing

The core of Apple’s innovation lies in the method its filing describes for detecting and interpreting the brain’s electrical activity through the ear canal. This represents a significant departure from conventional EEG systems, which typically rely on cumbersome and conspicuous headgear fitted with a network of electrodes and are therefore impractical for continuous, everyday use. By integrating these sensors into the familiar and socially acceptable form factor of its earbuds, Apple’s approach could allow for uninterrupted, non-invasive monitoring of a user’s neural state during a wide range of daily activities. This strategic placement aims to make brainwave monitoring as common and effortless as tracking steps or heart rate, democratizing access to a technology once confined to clinical settings and research laboratories. The potential is to create a constant stream of valuable neurological data without requiring any change in user behavior or comfort.

A critical technical advancement outlined in the patent is the concept of a “dynamic selection of electrodes.” This intelligent system is specifically engineered to overcome the practical hurdles inherent in wearable technology, such as the anatomical variations in users’ ear canals and the signal disruptions caused by movement. The system would be capable of identifying and switching between multiple electrode contact points in real-time, actively searching for the optimal sensor configuration to maintain a clear and stable EEG signal. This adaptability is paramount to transforming in-ear EEG from a promising but fragile concept into a viable and reliable technology for the mass market. It addresses the fundamental challenge of signal integrity, ensuring that the data collected is accurate enough for meaningful analysis, thereby moving the technology from the controlled environment of a lab to the unpredictable reality of daily life.
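
To make the idea of dynamic electrode selection concrete, the following is a minimal sketch and not the method claimed in the patent. It assumes each electrode delivers a short window of recent samples in microvolts, and it ranks electrodes by a simple, illustrative quality score: the share of samples that stay within a plausible amplitude range, with flat signals treated as lost contact. The electrode names, both thresholds, and the functions `quality` and `select_electrodes` are all hypothetical.

```python
from statistics import pstdev

# Assumed thresholds, in microvolts. Both values are illustrative only.
ARTIFACT_UV = 100.0   # larger swings are treated as motion or contact artifacts
FLATLINE_UV = 0.5     # near-zero spread is treated as a lost contact


def quality(window):
    """Score a window of samples from 0.0 to 1.0; higher is better."""
    # Too little data, or a flat signal, counts as an unusable electrode.
    if len(window) < 2 or pstdev(window) < FLATLINE_UV:
        return 0.0
    # Fraction of samples that fall inside the plausible amplitude range.
    clean = sum(1 for value in window if abs(value) <= ARTIFACT_UV)
    return clean / len(window)


def select_electrodes(windows, k=2):
    """Return the ids of the k electrodes with the best recent quality."""
    ranked = sorted(windows, key=lambda name: quality(windows[name]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical readings for four contact points on one earbud.
    recent = {
        "tip": [5, -8, 12, -3],
        "canal_a": [5, 400, -350, 2],
        "canal_b": [0, 0, 0, 0],
        "concha": [10, -20, 150, 5],
    }
    print(select_electrodes(recent, k=2))
```

A real system would re-run a selection like this continuously as the earbud shifts, and would likely add some hysteresis so that it does not flip between electrodes on every small fluctuation.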

A Multimodal Approach to Biosensing

Apple’s vision, as detailed in the patent filings, extends far beyond basic EEG monitoring to encompass an integrated, multimodal biosensing platform. By combining brainwave data with information from other advanced sensors, such as electromyography (EMG) for detecting muscle activity and electrooculography (EOG) for tracking eye movements, the earbuds could capture a rich, holistic picture of a user’s physiological state. Furthermore, the integration of sensors for measuring heart rate and body temperature would effectively turn the earbuds into comprehensive health and wellness monitors. This fusion of diverse data streams would provide a much deeper and more contextualized understanding of a user’s condition than any single metric could offer, creating a powerful tool for both personal insight and potential medical diagnostics. The goal is to build a complete physiological profile from a single, unobtrusive device.

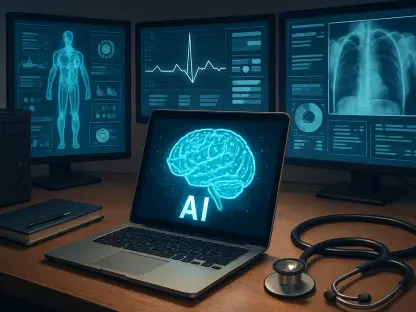

This wealth of incoming data would be interpreted by artificial intelligence and machine learning models, forming the cognitive engine of the system. One area of research reportedly involves AI that can learn an individual’s unique brain activity patterns without requiring pre-annotated data from a clinical setting. This self-learning capability is crucial for delivering personalized neural insights and predictive health alerts, tailoring the device’s functionality to the specific neuro-signature of each user. This progression is a methodical evolution of Apple’s wearable strategy, building upon existing features like the skin-detect sensors in current AirPods. In essence, the company is systematically advancing its wearables from simple accessories into intelligent neural interfaces that can learn, adapt, and provide proactive guidance.

Redefining Personal Health and User Interfaces

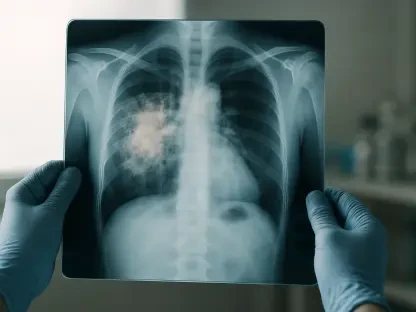

The potential applications of this technology in the healthcare domain are profound and far-reaching, promising a new era of preventative and personalized medicine. Continuous, passive brain monitoring could enable the early detection of serious neurological conditions like epilepsy, with the system potentially configured to provide real-time seizure alerts to the user or emergency contacts through a connected device like an iPhone. It could also monitor for subtle changes in brainwave patterns that might indicate the onset of dementia or other neurodegenerative diseases long before clinical symptoms appear. On a daily wellness level, the earbuds could quantitatively assess stress levels, monitor sleep quality in detail by tracking sleep stages, and even provide predictive warnings for conditions like migraines by recognizing precursor neural signals. This data would integrate into Apple’s existing Health app ecosystem, complementing metrics from the Apple Watch to provide a more complete and actionable view of user health.

Beyond its medical and wellness applications, the technology could fundamentally reshape how users interact with their digital devices. The patent opens the door to a future where brain-computer interfaces (BCIs) become a mainstream reality, allowing users to control music playback with their thoughts, for instance by issuing a mental command to skip a track or adjust the volume. In the rapidly developing context of spatial computing, brain-signal and gaze-tracking data from the earbuds could be fused to create a more immersive and intuitive control scheme for the Vision Pro headset, enabling navigation and interaction with a speed and fluidity that current input methods cannot match. This potential for direct neural control has sparked considerable excitement in technology communities, with widespread speculation about new applications in gaming, productivity, and augmented reality that could redefine human-computer interaction.

Navigating the Neurotechnological Frontier

The introduction of this patent marks a potential watershed moment for consumer technology, pointing to a future where personal devices are no longer just tools we command but partners that can understand our physiological and cognitive states. While the technology promises transformative benefits for health, wellness, and user experience, its realization is contingent upon navigating a complex maze of technical, regulatory, and ethical challenges. The prospect of mass-market brain monitoring raises serious privacy concerns, as brain data is among the most intimate forms of personal information. The successful deployment of such a product would depend on Apple’s ability to earn and maintain public trust, ensuring that this deeply personal data is used to empower users while being protected with robust safeguards. The debate it has ignited centers on how to balance innovation with the profound responsibility of handling the innermost workings of the human mind.