What happens when the technology poised to transform healthcare also threatens to undermine patient trust and safety? In an era where artificial intelligence (AI) is reshaping medical practice at an unprecedented pace, health systems face a critical challenge: harnessing AI’s potential while safeguarding against its risks. This dilemma sets the stage for a groundbreaking response from the American Medical Association (AMA), which has introduced a new toolkit designed to guide health systems through the complex landscape of AI adoption with clarity and accountability.

The timing is significant. AI is already embedded in pilot programs for tasks like medical scribing and diagnostic support across many organizations, yet the absence of structured governance has left glaring gaps in oversight. Recent surveys indicate that while most health systems are experimenting with AI, only a small fraction have established robust frameworks to manage data use or vendor partnerships. The AMA’s toolkit arrives as a timely response, addressing urgent concerns about bias, privacy violations, and erroneous AI recommendations that could jeopardize patient care.

Why AI Governance Is Healthcare’s New Frontier

The integration of AI into healthcare promises remarkable advancements, from enhancing diagnostic precision to personalizing treatment plans. Yet, this same technology carries inherent dangers, such as perpetuating biases in algorithms or exposing sensitive patient data to breaches. These risks highlight a pressing need for governance structures that ensure AI serves as a tool for good rather than a source of harm in clinical settings.

Health systems are at a crossroads, balancing innovation with ethical responsibility. Without clear guidelines, the rapid adoption of AI—evidenced by its capture of 62% of digital health venture capital, totaling nearly $4 billion in the first half of this year—could lead to unintended consequences. The AMA’s initiative steps into this void, offering a framework to navigate the uncharted territory of AI implementation while prioritizing patient safety and trust.

This push for governance isn’t merely a precaution; it’s a necessity driven by real-world stakes. As AI tools become more prevalent in decision-making processes, the potential for flawed outputs to influence patient outcomes grows. Establishing oversight now is critical to prevent mishaps and build a foundation of accountability that can sustain future technological leaps.

The Surge of AI in Healthcare and the Call for Oversight

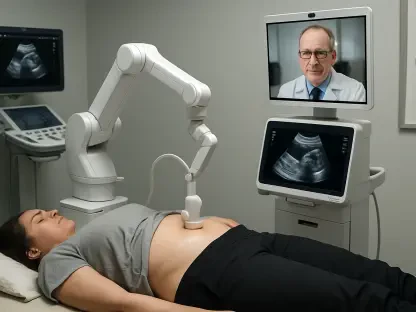

AI is no longer a theoretical concept in healthcare; it’s a tangible force reshaping operations. From assisting with medical documentation to supporting complex diagnoses, AI applications are being tested in numerous health systems. However, the enthusiasm for these tools is tempered by a stark reality: many organizations lack the strategic policies needed to manage their deployment effectively.

Survey data paints a concerning picture. Despite widespread experimentation with AI, few health systems have formalized governance around critical issues like data handling or third-party vendor assessments. This gap leaves room for errors—whether through biased algorithms or privacy lapses—that could directly impact the quality of care provided to patients.

Compounding this challenge is the sheer scale of investment driving AI’s growth. With billions of dollars fueling startups and innovations, the pressure to adopt AI quickly often overshadows the need for careful planning. The AMA’s response, in the form of a structured toolkit, aims to bridge this divide, providing health systems with the means to integrate AI responsibly amid an accelerating technological tide.

Inside the AMA’s AI Governance Toolkit

Developed in partnership with Manatt Health, the AMA’s Governance for Augmented Intelligence Toolkit offers an eight-step module tailored specifically for health systems. This resource outlines a clear path for implementation, beginning with establishing executive accountability to ensure leadership drives AI initiatives. It also delves into policy creation, defining acceptable uses like drafting research summaries while prohibiting risky actions such as entering personal health information into public AI platforms.

Beyond foundational steps, the toolkit addresses practical needs through vendor evaluation and organizational readiness. It emphasizes the importance of vetting third-party AI tools for safety and ethical compliance, while also preparing staff through comprehensive training programs. Accompanying resources—worksheets, sample forms, and example policies—make the process accessible, and physicians can even earn Continuing Medical Education credit by engaging with the materials.

Transparency and alignment with existing protocols are central to the toolkit’s design. Guidelines on data retention, informed consent, and security ensure that AI integration doesn’t clash with legal or ethical standards. By offering such a detailed roadmap, the AMA equips health systems to tackle both the operational and moral dimensions of adopting cutting-edge technology in patient care environments.

Expert Perspectives on the Imperative of AI Governance

Margaret Lozovatsky, M.D., AMA’s Chief Medical Information Officer, captures the urgency of this moment with a pointed observation: “Technology is moving very, very quickly. It’s moving much faster than we’re able to actually implement these tools, so setting up an appropriate governance structure now is more important than it’s ever been.” Her statement reflects the toolkit’s relevance as health systems race to keep pace with rapid AI advancements.

The AMA’s terminology—referring to AI as “augmented intelligence”—further underscores a key philosophy: technology should support, not supplant, healthcare professionals. This perspective is paired with advocacy for regulatory safeguards and protections against physician liability for AI-related errors, ensuring that the human element remains at the forefront of medical practice even as innovation surges.

Industry and governmental interest in AI only heightens the need for such guidance. With initiatives like the White House’s AI Action Plan promoting deregulation and testing environments for commercial AI, the toolkit serves as a vital counterbalance. It provides health systems with a way to navigate this evolving landscape, aligning innovation with accountability in a field where mistakes can carry profound consequences.

Actionable Steps for Health Systems to Adopt AI Governance

For health systems eager to integrate AI without compromising safety, the toolkit’s guidance can be distilled into five practical actions. First, forming a governance working group is essential to oversee policy development and ensure compliance with federal and state regulations. This team becomes the backbone of responsible AI adoption, addressing legal and ethical considerations from the outset.

Next, defining clear AI use policies is crucial, specifying permitted applications—like drafting patient communications—while explicitly banning high-risk practices, such as inputting sensitive data into unsecured tools. Equally important is the implementation of mandatory training programs to equip all staff with the knowledge needed to use AI ethically. Reviewing and updating existing policies on data security and antidiscrimination to incorporate AI-specific concerns further solidifies a system’s preparedness.

Finally, leveraging the toolkit’s resources, such as customizable worksheets and sample forms, streamlines the creation of tailored governance frameworks. Together, these steps enable health systems to mitigate risks while tapping into AI’s benefits, whether by improving diagnostic tools or easing administrative workloads. Early adopters that refine their protocols through pilot programs suggest that such structured approaches can improve both efficiency and care quality.

Reflecting on a Path Forward

The release of the AMA’s AI governance toolkit marks a pivotal moment for healthcare, addressing a critical gap at a time when technology is outpacing preparedness. Health systems that embrace its guidance gain a structured way to balance innovation with responsibility and to keep patient trust intact amid rapid change.

From here, the focus shifts toward refining these governance structures through ongoing collaboration and adaptation. Health systems are encouraged to share insights from their implementations, fostering a collective learning environment that can address emerging challenges over time.

Beyond individual efforts, the broader industry faces the task of aligning with evolving regulatory landscapes and technological advancements. Committing to regular policy updates and investing in staff education are essential next steps toward a future in which AI serves as a true partner in enhancing healthcare delivery without compromising its core values.