In an era where digital health tools are transforming patient care, apps like Flo.Health have gained immense popularity for helping individuals track menstrual cycles, ovulation, and reproductive health with ease. Beneath this convenience, however, lies a troubling reality that healthcare providers must confront: the significant privacy risks associated with such platforms. Flo.Health, in particular, has come under intense scrutiny for sharing highly sensitive user data with third parties without adequate consent, sparking legal battles and ethical debates. This situation raises critical questions about the safety of recommending digital tools in clinical practice. As providers increasingly integrate these tools into patient care plans, understanding the implications of unauthorized data sharing and the responsibilities tied to endorsing apps becomes paramount. The unfolding story of Flo.Health serves as a stark reminder that technology, while empowering, can also jeopardize patient trust and confidentiality if not handled with rigorous oversight.

Uncovering Data Vulnerabilities in Digital Health Apps

The privacy concerns surrounding Flo.Health emerged as a major issue when it was discovered that the app had been transmitting deeply personal information, such as reproductive health details, to external entities like Meta, the parent company of Facebook, without obtaining proper user consent. This breach of trust is not an isolated incident but rather a reflection of a systemic vulnerability within the digital health industry. Many mobile health applications lack stringent safeguards, allowing sensitive data to be shared with data brokers or third parties who may exploit it for commercial gain. For healthcare providers, this revelation is alarming, as recommending such tools could inadvertently expose patients to risks beyond their control. The lack of transparency in data handling practices means that even well-intentioned suggestions can backfire, placing both patient privacy and professional credibility at stake in an environment where data protection often lags behind technological advancements.

Beyond the specific case of Flo.Health, the broader landscape of health apps reveals a troubling pattern of inadequate privacy protections that providers must navigate. The integration of tracking technologies, such as Meta Pixel, has enabled the covert collection of health-related data from users who may not even be active on social media platforms at the time. This kind of hidden data harvesting undermines the fundamental expectation of confidentiality in healthcare. For clinicians, the implications are profound: endorsing an app without fully understanding its data-sharing mechanisms could compromise patient safety. The challenge lies in balancing the benefits of digital tools, which can enhance patient engagement and self-monitoring, with the potential for unauthorized access to personal information. As these technologies become more embedded in care delivery, providers face the daunting task of staying informed about evolving risks to ensure that their recommendations do not inadvertently harm those they aim to help.

Legal Repercussions and Regulatory Oversight

Flo.Health’s privacy missteps led to significant legal consequences, beginning with a settlement with the Federal Trade Commission (FTC) that was finalized in June 2021. The agreement came after allegations that the app shared sensitive user data without explicit permission, and it compelled Flo.Health to implement stricter measures. These included obtaining clear consent before sharing health data, undergoing an independent review of its privacy practices, and notifying users affected by previous disclosures. This legal action highlights a growing demand for accountability in the digital health sector, where lax oversight has often left users vulnerable. For healthcare providers, the settlement serves as a cautionary tale about the potential fallout from recommending apps that fail to prioritize data security. It also signals a shift toward stricter regulatory expectations, pushing developers to adopt transparent practices that align with patient rights and privacy standards.

Adding to the legal scrutiny, a California federal jury delivered a significant verdict in August 2025, finding Meta liable under the California Invasion of Privacy Act for collecting data through the software development kit (SDK) embedded in the Flo.Health app without proper authorization. This decision marks a pivotal moment, holding not only app developers but also their third-party partners accountable for privacy violations. Although a jury verdict is not binding precedent, it could influence future cases by emphasizing that all entities involved in data collection bear responsibility for safeguarding user information. For providers, this development underscores the complexity of digital health ecosystems, where multiple players may contribute to privacy risks. Staying abreast of such legal outcomes is crucial, as they shape the standards and expectations for apps used in clinical settings, ultimately affecting the trust patients place in recommended tools and the professionals who endorse them.

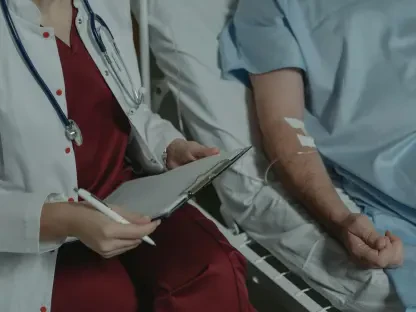

Ethical Obligations in Clinical Recommendations

Healthcare providers who suggest digital tools like Flo.Health are bound by a profound ethical duty to protect patient confidentiality, a principle enshrined in professional codes across disciplines such as medicine, nursing, and counseling. These guidelines stress the importance of maintaining privacy and ensuring informed consent, regardless of whether care is delivered in person or through digital means. Recommending an app is not a casual suggestion but an extension of clinical responsibility, requiring the same level of diligence as prescribing medication or treatment plans. The Flo.Health case illustrates how easily privacy can be compromised, placing providers in a position where they must critically evaluate the tools they advocate. Failure to do so risks violating the trust patients place in their caregivers, as well as the ethical standards that define professional practice in healthcare.

Navigating the ethical landscape becomes even more challenging when considering the potential impact of privacy breaches on patient-provider relationships. When providers recommend apps without fully understanding their data practices, they may unintentionally expose patients to risks that undermine confidence in both the technology and the clinician’s judgment. The Flo.Health incident serves as a stark example of how hidden data-sharing practices can fracture trust, making patients wary of sharing personal information or using digital tools altogether. Providers must therefore prioritize transparency, ensuring that patients are aware of potential privacy concerns before using recommended apps. By fostering open discussions about risks and benefits, clinicians can uphold their ethical obligations while empowering patients to make informed decisions about their health management in an increasingly digital world.

Impact on Patient Trust and Clinical Practice

The privacy breaches associated with Flo.Health have far-reaching consequences for patient trust in digital health tools, an issue that directly affects healthcare providers. When sensitive information is shared without consent, patients may question the safety of using such apps, leading to reluctance in adopting technologies that could otherwise support their care. This erosion of confidence extends beyond the app itself to the clinicians who recommended it, as patients may perceive a lack of due diligence on the provider’s part. In a field where trust is foundational to effective treatment, such incidents can create barriers to engagement, making it harder for providers to integrate digital solutions into care plans. The challenge lies in reassuring patients that their privacy is a priority, even as technology continues to play a larger role in healthcare delivery.

For providers who have previously endorsed Flo.Health, addressing past recommendations poses a unique set of challenges in maintaining clinical integrity. Transparency becomes key in these situations, as clinicians must acknowledge any newfound privacy risks associated with the app and offer guidance on protecting personal data. This might involve discussing alternative tools with stronger privacy protections or advising patients on steps to minimize data exposure, such as adjusting app settings. By taking a proactive stance, providers can demonstrate a commitment to patient welfare, helping to rebuild trust that may have been damaged by earlier advice. This approach not only mitigates the impact of past endorsements but also reinforces the importance of staying informed about the evolving landscape of digital health, ensuring that future recommendations align with the highest standards of care and confidentiality.

Practical Strategies for Mitigating Privacy Risks

To address the privacy concerns highlighted by the Flo.Health case, healthcare providers can adopt proactive measures to safeguard patient data when recommending digital tools. A critical first step is thoroughly vetting app privacy policies to ensure they meet stringent security standards before suggesting them to patients. Additionally, educating patients about potential data-sharing risks empowers them to make informed choices about using such tools. Encouraging privacy-focused practices, such as disabling unnecessary social media integrations or limiting data permissions, can further reduce exposure. Integrating discussions about digital privacy into routine care ensures that patients understand the implications of using health apps and feel supported in navigating these technologies. By prioritizing these steps, providers can minimize risks and reinforce their role as trusted advocates for patient safety in a digital age.

Beyond initial recommendations, providers must also consider ongoing support and communication as part of their strategy to address privacy risks. This involves staying updated on legal and regulatory developments related to health apps, as well as any new vulnerabilities that may emerge over time. When issues arise, such as those seen with Flo.Health, clinicians should be prepared to engage with patients transparently, offering resources or alternatives to mitigate concerns. Creating a culture of openness around digital health tools can help maintain trust, even in the face of breaches. Providers might also collaborate with professional organizations to access updated guidelines or training on digital privacy, ensuring their practices remain aligned with best standards. These efforts collectively strengthen the ability of clinicians to navigate the complex intersection of technology and patient care, fostering an environment where digital tools enhance rather than endanger health outcomes.

Moving Forward with Informed Caution

The Flo.Health saga shows how unchecked data-sharing practices exposed critical flaws in digital health privacy, prompting decisive action from the FTC and, later, a California federal jury. These interventions, which held both the app developer and a third-party data recipient accountable, marked a turning point in addressing systemic vulnerabilities. Providers who recommended the app now face an ethical dilemma: how to reconcile past advice with newly uncovered risks while upholding patient trust. The emphasis on transparency and informed consent found in professional codes offers a guiding principle for that effort. Looking ahead, clinicians must commit to rigorous vetting of digital tools, prioritize patient education on privacy risks, and advocate for stronger industry standards. By fostering collaboration among healthcare professionals, regulators, and app developers, the path forward can focus on building secure, trustworthy digital health solutions that truly serve patient needs.