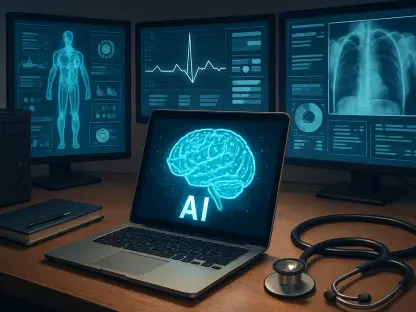

In an era where mental health challenges are on the rise and access to professional care remains out of reach for millions, artificial intelligence (AI) mental health chatbots have stepped into the spotlight as a potential game-changer. These digital tools, available at any hour, provide coping mechanisms, mindfulness guidance, and a compassionate ear to those grappling with emotional struggles. Yet, a heated debate has surfaced about whether these innovative apps should fall under the strict oversight of the Food and Drug Administration (FDA) as medical devices. Recently, discussions at an FDA Digital Health Advisory Committee meeting have brought this issue to the forefront, pitting the need for safety against the drive for accessible solutions. As this conversation unfolds, it’s clear that the stakes are high—not just for developers, but for the countless individuals relying on these tools for support. The question remains: where should the line be drawn between innovation and regulation?

Defining the Nature of AI Chatbots

The heart of the debate lies in understanding what AI mental health chatbots actually are. Unlike traditional medical devices, which are explicitly engineered to diagnose, treat, or prevent specific conditions, these chatbots are designed as wellness tools. They offer general support through stress-relief techniques, guided reflections, and cognitive behavioral reframing, without claiming to address particular disorders. Proponents argue that this difference sets them apart from regulated products such as prescription digital therapeutics, which target diagnosed conditions with clinical intent. Labeling chatbots as medical devices, therefore, looks like a mismatch: a policy overreach that could misrepresent their purpose. The distinction matters because it shapes how society views their role in mental health care and whether stringent oversight fits their actual function.

Moreover, the design of these chatbots prioritizes accessibility over clinical intervention. They’re built to be companions for emotional well-being, not substitutes for licensed therapists or medical equipment. Consider how they operate: users engage in conversations that mimic supportive dialogue, often finding solace in curated responses grounded in psychological principles. Yet, there’s no assertion of curing ailments or providing definitive diagnoses. This positions them closer to self-help resources than to regulated health technologies. Applying FDA standards meant for devices with direct medical claims could burden these tools with irrelevant expectations, potentially stifling their growth in a field desperate for affordable options. The nuance here fuels a broader question of whether regulation should adapt to the unique nature of digital wellness innovations.

Financial Burdens and Accessibility Risks

In practical terms, regulating AI chatbots as medical devices introduces a steep financial hurdle. The FDA registration process demands a yearly fee exceeding $11,000, alongside significant costs for premarket documentation, risk assessments, and ongoing compliance reports. For many developers, especially the smaller startups driving innovation in this space, such expenses could be prohibitive. The ripple effect is troubling: fewer players in the market could mean less competition and higher prices for end users. At a time when access to mental health care is already strained, these barriers risk pushing vital support further out of reach for those who need it most. This economic reality strengthens the argument against heavy regulation.

Beyond the numbers, there’s a human cost to consider. Many individuals turn to AI chatbots precisely because traditional therapy—often expensive or geographically inaccessible—isn’t an option. These tools provide an affordable alternative, sometimes costing little to nothing for basic features. If regulatory costs force developers to scale back or shut down, the gap in mental health support could widen dramatically. Imagine a single parent juggling work and family, finding solace in a late-night chat with an app, only to lose that lifeline due to regulatory overreach. The potential loss of such resources isn’t just a business concern; it’s a societal one, touching on the urgent need to prioritize access over bureaucratic constraints. This tension between cost and care remains a pivotal point in the debate.

Tangible Benefits in Emotional Support

Despite their non-clinical status, AI mental health chatbots appear to make a meaningful difference for many users. Studies and user feedback point to reductions in feelings of isolation, with many users reporting lighter burdens of anxiety or mild depression after engaging with these tools. They serve as a digital bridge, connecting individuals to immediate emotional support when traditional therapy isn’t feasible due to cost, stigma, or availability. This isn’t about replacing professional care but about supplementing it with a low-barrier, always-on resource. Framing these outcomes as general wellness gains rather than medical treatments underscores why many stakeholders resist FDA oversight that could mischaracterize their purpose.

Furthermore, the value of these chatbots lies in their ability to democratize mental health support. They cater to diverse populations—think rural residents far from therapists or young adults hesitant to seek formal help. By offering a judgment-free space to vent or reflect, they fill a unique niche that formal systems often overlook. Unlike medical devices targeting specific diagnoses, their strength is in broad, empathetic engagement, tailored to individual emotional needs through adaptive algorithms. This personalized yet non-diagnostic approach challenges the notion that they require the same scrutiny as clinical tools. As such, their role as a complementary asset in the mental health landscape suggests a need for regulatory frameworks that acknowledge their distinct benefits without imposing undue restrictions.

Addressing Safety Through Self-Regulation

A common concern among critics is the potential for AI chatbots to mishandle sensitive situations, perhaps offering misguided advice during a crisis. This worry isn’t unfounded—mental health interactions demand care and precision. However, the industry isn’t sitting idly by. Many companies have already embedded safeguards, such as partnerships with mental health professionals to refine responses and integrations with crisis hotlines to redirect users in distress. These proactive steps reflect a commitment to safety that doesn’t hinge on FDA mandates. The question then arises: if developers are taking responsibility, is external oversight truly necessary, or could it simply add red tape without enhancing user protection?

In addition, the self-regulatory measures adopted by chatbot creators often draw on evidence-based practices, ensuring interactions remain supportive and grounded. Specialized apps in this space collaborate with experts to design frameworks that prioritize user well-being, from recognizing signs of severe distress to offering actionable guidance toward professional help. This internal accountability contrasts with the one-size-fits-all approach of medical device regulation, which might not suit the fluid, conversational nature of AI tools. The industry’s efforts suggest a viable middle ground—maintaining safety through voluntary standards while avoiding the constraints of formal oversight. This balance could preserve innovation while addressing public concerns, challenging the push for stricter controls.

Contextualizing the Mental Health Crisis

Zooming out to the broader landscape, the debate over chatbot regulation cannot ignore the stark reality of a mental health care crisis in the U.S. Millions lack access to therapists due to financial barriers, long waitlists, or geographic isolation. In this context, AI chatbots aren’t just nice-to-have tools; they’re a critical lifeline, offering round-the-clock support to those who might otherwise go without. Their ability to provide immediate interaction—no appointment needed—meets a pressing societal need. If stringent regulations limit their availability, the fallout could disproportionately harm vulnerable populations already struggling to find help, amplifying an already dire situation.

Moreover, the crisis context highlights why regulatory balance is so vital. Mental health challenges don’t adhere to business hours or wait for budget approvals, yet traditional systems often do. Chatbots step into this gap with scalability that brick-and-mortar care can’t match, reaching users across diverse circumstances. Consider a teenager in a remote area, unable to travel for therapy, finding comfort in a digital conversation. Curtailing such tools through overregulation risks exacerbating inequities in care access, a consequence that weighs heavily against the push for FDA control. This societal backdrop frames the conversation not just as a policy debate, but as a moral imperative to protect accessible solutions in times of widespread need.

Voices from the Field and Future Paths

Turning to the perspectives of those directly involved, developers and advocates largely stand united in viewing AI chatbots as wellness aids, not medical devices. Public statements to the FDA emphasize a shared mission: enhancing emotional health without crossing into clinical territory. There’s palpable concern that overregulation could stall progress in digital health, especially when companies are already embedding safety through expert collaboration and scientific grounding. This consensus challenges the assumption that government intervention is the sole path to user protection, instead pointing to a collaborative model where innovation and responsibility coexist. The industry’s voice adds a pragmatic layer to the discussion, urging policies that reflect the tools’ intended scope.

Looking ahead, the path forward demands nuanced solutions that avoid blanket mandates. Policymakers might consider tiered frameworks: lighter oversight for wellness-focused apps and stricter rules for those making medical claims. Such an approach could safeguard users without choking off development in a field poised to address systemic care shortages. Meanwhile, continued dialogue among the FDA, developers, and mental health experts could refine safety standards organically. Ultimately, the goal should be fostering tools that remain accessible and effective for those in need, ensuring that today’s debates pave the way for thoughtful, balanced progress in digital mental health support.