The promise of artificial intelligence to revolutionize healthcare by delivering faster, more accurate diagnoses and treatments is being met with a troubling reality: a sharp increase in reports of patient harm and device malfunctions. As AI-enabled medical devices proliferate, a critical tension has emerged between the rapid pace of innovation and the capacity of regulatory systems to ensure these sophisticated tools are safe for the very patients they are designed to help. This widening gap raises urgent questions about whether the rush to integrate AI into medicine is inadvertently creating a new frontier of risk.

The Surge in AI-Related Medical Incidents

The integration of artificial intelligence into established medical technologies has, in some cases, corresponded with an alarming rise in adverse event reports, raising significant questions about the real-world performance and safety of these enhanced systems. This pattern suggests that while AI can add powerful capabilities, it may also introduce new and unforeseen failure modes that are not being adequately addressed before the technology reaches patients. The data points to a systemic issue where the complexity of AI algorithms may be creating a gap between predicted performance in controlled settings and actual outcomes in clinical practice, a discrepancy that regulators and manufacturers are now struggling to understand and mitigate. This trend underscores the necessity for a more rigorous post-market surveillance framework capable of detecting and analyzing AI-specific malfunctions before they result in widespread patient harm.

A Case Study in Unforeseen Complications

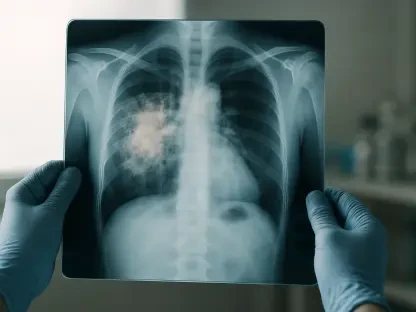

A compelling illustration of this emerging challenge is the TruDi Navigation System, a device used in sinus surgery developed by Acclarent. Before the integration of AI software, the device had a relatively quiet history, with records showing seven unconfirmed malfunctions and a single patient injury over a three-year period. This baseline suggests a stable and predictable performance profile for the original technology. The established reliability of the non-AI version makes the subsequent developments particularly stark, serving as a critical benchmark against which the impact of the AI enhancement can be measured. Set against what followed, this history suggests how a seemingly minor software update, intended to improve functionality, can alter a device’s risk profile in ways that may not be apparent during the initial approval process, pointing to a potential blind spot in current regulatory evaluations.

In stark contrast, following the system’s AI enhancement, the U.S. Food and Drug Administration (FDA) began receiving a wave of unconfirmed incident reports, totaling at least 100. This dramatic increase signals a significant shift in the device’s performance post-upgrade. Integra LifeSciences, which acquired Acclarent from Johnson & Johnson, has acknowledged that these reports were filed after the system’s use but maintains that there is no compelling evidence directly linking its AI technology to patient injuries. This position highlights a central dilemma in the age of medical AI: a correlation between AI integration and adverse events does not definitively prove causation. However, the sheer volume of reports for a single device creates a compelling case for deeper investigation and raises broader concerns about whether the current regulatory framework is equipped to handle the unique complexities and potential unpredictabilities of AI-driven medical tools.

A Widespread and Growing Concern

The issues observed with the TruDi system are not an isolated anomaly but rather symptomatic of a broader, industry-wide trend. Data reveals a significant and sustained increase in safety concerns across the spectrum of AI-based medical devices. Since 2021, the FDA has received at least 1,401 reports detailing adverse events linked to these technologies, a figure that has grown alongside the rapid expansion of the market. The number of AI-enabled medical devices approved for use has nearly doubled since 2022, now standing at at least 1,357. This explosive growth indicates that the technology is being commercialized at a rate that may be outpacing the development of comprehensive safety protocols and long-term performance monitoring. The escalating number of incident reports suggests that the challenges of ensuring AI safety are not confined to a single device or manufacturer but represent a systemic issue facing the entire medical technology sector.

The breadth of the problem is further illustrated by malfunctions occurring in diverse medical applications, from prenatal care to cardiology. For instance, the Sonio Detect, a prenatal ultrasound system, was reported to have incorrectly labeled and mapped critical fetal anatomical structures, a type of error that could have profound diagnostic consequences. Similarly, AI-powered cardiac monitors from Medtronic have reportedly failed to recognize abnormal heart rhythms, a critical function for patients at risk of serious cardiac events. While Medtronic has noted that some of these issues could be attributed to user error and has asserted that no patient harm resulted from confirmed device failures, these incidents collectively underscore the vulnerability of AI systems. They reveal that even in non-surgical applications, AI failures can introduce significant risks, from misdiagnosis to delayed treatment, highlighting the urgent need for industry-wide standards for algorithm validation and performance monitoring.

Navigating the Regulatory Maze

The rapid commercialization of artificial intelligence within the medical field is presenting an unprecedented challenge to regulatory bodies, creating a scenario where technological advancement is significantly outpacing the development of effective oversight. The current frameworks, largely designed for traditional hardware and software, are ill-equipped to manage the unique nature of AI, particularly its ability to learn and evolve after deployment. This mismatch creates a potential risk where devices are brought to market without a full understanding of their real-world performance characteristics and potential failure modes. The U.S. Food and Drug Administration, the primary body responsible for ensuring medical device safety, is finding its resources and methodologies stretched thin as it attempts to keep pace with an ever-expanding pipeline of complex, algorithm-driven products that defy conventional validation techniques.

The FDA’s Uphill Battle

The U.S. Food and Drug Administration is currently grappling with significant internal challenges that hinder its ability to effectively regulate the burgeoning field of medical AI. Reports indicate that the agency is facing a critical loss of experienced staff, which depletes its institutional knowledge and expertise at the very moment it is most needed. This brain drain is compounded by a lack of sufficient resources, which restricts the agency’s capacity to hire new talent with specialized skills in machine learning and data science. As a result, the FDA is in a constant race to catch up with a rapidly advancing private sector that operates with far greater agility and financial backing. This resource and personnel gap creates a dangerous imbalance, making it increasingly difficult for the agency to conduct thorough pre-market reviews and robust post-market surveillance of the hundreds of AI-enabled devices entering the healthcare ecosystem each year.

The inherent complexity of AI systems adds another layer of difficulty to the FDA’s mission, as these technologies often function as “black boxes,” where even their developers cannot fully explain the reasoning behind a specific output. This lack of transparency makes it incredibly challenging to validate an algorithm’s safety and effectiveness or to diagnose the root cause of a malfunction when it occurs. Traditional regulatory pathways are not designed to assess a device that can change its performance based on new data it encounters after being deployed. The agency is therefore tasked with creating entirely new evaluation paradigms capable of overseeing these “learning” systems throughout their entire lifecycle. Without such frameworks, the FDA is at risk of approving devices whose long-term behavior is unpredictable, potentially allowing unsafe or biased algorithms to proliferate in clinical settings, where they could impact the health outcomes of millions of patients.

A Path Forward for Safer Innovation

The consensus among industry experts, clinicians, and ethicists is clear: while artificial intelligence holds immense potential to transform healthcare for the better, its safe and ethical implementation demands a more robust and adaptive regulatory framework. The existing challenges highlight a critical need to move beyond traditional device approval processes. The path forward involves a multi-faceted approach that balances the drive for innovation with an unwavering commitment to patient protection. This requires the development of transparent regulations specifically tailored to the unique characteristics of AI, including its capacity for continuous learning and its potential for algorithmic bias. The goal is to create a system that can foster technological advancement while establishing clear guardrails to prevent harm, ensuring that the benefits of medical AI can be realized equitably and safely.

To achieve this balance, a collaborative effort among regulatory bodies, technology developers, and healthcare providers is deemed essential. Key components of the proposed framework include systematic data collection on device performance in real-world clinical settings, moving beyond the limited data of pre-market trials. Clear standards for risk assessment, specifically addressing AI-related failure modes, are also identified as a priority. Furthermore, establishing rigorous benchmarks for data quality and model validation is seen as crucial to preventing the deployment of flawed or biased algorithms. Governments and regulatory agencies have already begun the difficult work of developing these new approaches, signaling a recognition that the future of medicine depends on their ability to ensure that the powerful tools of AI are deployed not just effectively, but with the utmost care for patient well-being.