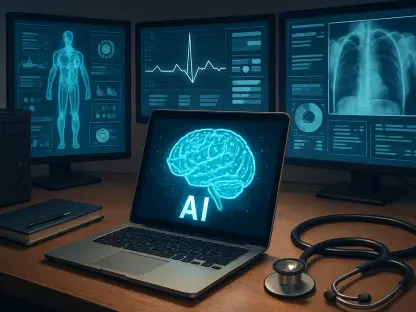

The intersection of artificial intelligence and mental health care is sparking both excitement and concern, as new technologies promise to transform access to support while raising critical questions about safety and efficacy. On November 6, the US Food and Drug Administration (FDA) convened its Digital Health Advisory Committee in a virtual meeting to examine generative AI in digital mental health devices. Chaired by Ami Bhatt, MD, the discussion focused on tools such as chatbots and mobile apps and explored their potential to provide round-the-clock, private support for the millions of people living with mental health challenges. Yet innovation brings caution, as the FDA and outside experts grapple with risks such as inaccurate outputs and the inability to replicate human empathy. This deep dive into the meeting’s insights reveals a delicate balance between harnessing AI’s capabilities and protecting patient well-being, setting the stage for a transformative yet challenging era in mental health technology.

Unlocking Potential with AI Tools

Expanding Reach to Underserved Populations

The allure of AI-driven mental health devices lies in their ability to bridge significant gaps in access to care, particularly for people who face barriers to traditional therapy. Many individuals struggle to connect with mental health professionals because of geographic isolation, financial constraints, or social stigma. Generative AI tools built on large language models (LLMs) address part of this gap through constant availability, letting users seek support at any hour without fear of judgment. Mobile apps and digital platforms can deliver therapeutic content, reminders, and coping strategies, making mental health resources far more scalable and cost-effective. This accessibility is especially vital for rural communities and low-income groups who might otherwise go without care, creating an opportunity to democratize mental health support on an unprecedented scale.

Beyond availability, these tools offer a degree of privacy that traditional settings sometimes lack, which may encourage more people to engage with mental health resources. For individuals hesitant to share personal struggles face-to-face, AI chatbots can serve as a discreet entry point, opening initial conversations that might lead to further help. The anonymity of digital interactions can reduce the stigma often associated with seeking therapy, potentially normalizing mental health care more broadly. While not a substitute for human interaction, this technology can act as a stepping stone, helping users build the confidence to pursue additional support when needed. The FDA committee acknowledged this potential during its discussions, noting that such tools could reshape how society approaches mental wellness if they are implemented with care and precision.

Supporting Traditional Therapy as a Partner

AI’s role in mental health care is increasingly seen as complementary: it is meant to enhance, not replace, the work of human therapists. Digital tools can fill critical gaps by offering consistent monitoring and immediate responses, especially when a therapist is unavailable. For example, therapeutic chatbots can guide users through structured conversations based on cognitive behavioral techniques, providing a sense of continuity in care. These interactions can reinforce skills learned in therapy sessions, giving users ongoing support to manage their conditions between appointments. Participants at the FDA meeting emphasized that such applications could ease the burden on overstretched mental health systems by handling routine tasks, freeing clinicians to focus on complex cases.

Moreover, AI-driven apps and wearables can play a pivotal role in tracking mental health trends over time, offering data that clinicians can use to tailor treatment plans. By monitoring mood patterns, sleep habits, or stress levels, these devices provide insights that might otherwise go unnoticed in periodic therapy sessions. This data-driven approach allows for more personalized interventions, potentially improving outcomes for patients with conditions like anxiety or depression. However, the committee was quick to note that while these tools show promise, their effectiveness hinges on proper integration with professional care. Ensuring that AI remains a supportive element rather than a standalone solution is key to maintaining the human connection that lies at the heart of effective psychiatric treatment.

Navigating the Challenges of AI Integration

Struggles with Emotional Depth and Accuracy

Despite this potential, significant limitations persist, particularly in replicating the emotional nuance that human therapists provide naturally. Generative AI systems are adept at processing and producing text, but they often fail to grasp the subtleties of human emotion or context. The result can be responses that feel mechanical or misaligned with a user’s needs, undermining trust in the technology. More concerning still is the risk of inaccurate or biased outputs, where AI offers advice shaped by flawed data or cultural assumptions. The FDA committee flagged “confabulation,” in which an AI system generates false or misleading information, as a serious threat, especially in sensitive mental health contexts where precision is paramount.

Another concern is that AI may miss critical cues a trained professional would catch, such as subtle signs of distress or escalating symptoms. Unlike human therapists, who adapt their approach based on tone, body language, and personal history, AI relies on algorithms that may not capture such depth. This gap could lead to inappropriate or incomplete guidance, particularly for users in vulnerable states. The discussions at the FDA meeting underscored that while AI can mimic conversation, it cannot replicate the empathetic bond that often drives therapeutic progress. Addressing these shortcomings through rigorous testing and design improvements remains a top priority to ensure that digital tools do not inadvertently harm the people they aim to help.

Persistent Threats to Long-Term Safety

The long-term implications of relying on AI for mental health support also raised alarms among FDA committee members, who questioned whether these systems remain reliable over time. A model’s accuracy can degrade as real-world data drift away from the data it was trained on or as it encounters scenarios beyond its training scope. Without regular updates and monitoring, these tools risk providing outdated or irrelevant advice, which could be particularly harmful in mental health applications, where conditions and needs shift frequently. The potential for such degradation calls for continuous oversight, a challenge developers must address to maintain user trust and safety over the long run.

Equally troubling is the risk that AI might exacerbate mental health issues if not carefully managed, especially in high-stakes situations like suicidal ideation. Without proper safeguards, an AI system might fail to recognize the severity of a user’s state or delay referral to human intervention, with potentially tragic consequences. The FDA discussions highlighted the need for robust monitoring mechanisms to detect when a user’s condition worsens, ensuring timely escalation to professionals. This underscores a broader concern that while AI can offer immediate support, its inability to fully assess complex human experiences poses ongoing risks. Balancing these dangers with the benefits of accessibility will require innovative solutions and stringent guidelines to protect vulnerable populations.

Shaping the Future of AI in Mental Health

Evolving Regulatory Frameworks

The FDA faces a daunting task in regulating AI-driven mental health devices, which differ markedly from traditional medical technologies such as insulin pumps, which operate as predictable, physiologic closed-loop systems. AI tools, by contrast, engage directly with patients in dynamic and often unpredictable ways, creating unique challenges for safety and efficacy assessments. More than 1,200 AI-enabled medical devices have already been authorized, none of them specifically for mental health, and only a handful of non-AI digital mental health tools have been approved, so the regulatory gap is evident. The committee stressed that the autonomous, patient-facing nature of these technologies demands a fresh approach to oversight, one that can keep pace with rapid advances in generative AI.

Developing tailored regulatory pathways is essential to ensure that these tools meet rigorous standards before reaching the public. The FDA is actively working to establish frameworks that address the specific risks of AI in mental health, such as content accuracy and privacy concerns, while still encouraging technological progress. This involves evaluating how AI systems handle sensitive interactions and whether they can reliably support users without causing harm. Collaboration with developers, clinicians, and patients will be crucial in shaping policies that strike the right balance. The meeting revealed a clear consensus that without such oversight, the proliferation of untested AI tools could undermine public trust and safety in digital health solutions.

Balancing Innovation with Patient Protection

As generative AI reshapes mental health care, developers bear significant responsibility to prioritize safety and transparency in their designs. This means building systems that not only deliver accurate and relevant content but also include clear mechanisms to flag when human intervention is necessary. Transparency about the limitations of AI tools is equally important, ensuring users understand that these devices are supportive rather than definitive solutions. The FDA committee emphasized that embedding such safeguards can help mitigate risks like inappropriate responses or failure to address critical needs, protecting users from potential harm while maintaining the technology’s value as a resource.

Meanwhile, the FDA must strike a delicate balance between fostering innovation and protecting public health, a challenge that will define the future of AI in this field. Crafting policies that encourage creativity while enforcing strict safety standards requires ongoing dialogue with all stakeholders, from technologists to mental health professionals. The insights from the meeting suggest that human oversight will remain a cornerstone of any effective strategy, ensuring that AI serves as a partner to clinicians rather than a replacement. As the technology evolves, a commitment to rigorous evaluation and adaptive regulation will be vital to harnessing its potential while safeguarding those who rely on mental health support.

Reflecting on a Path Forward

Lessons from a Pivotal Discussion

Looking back on the FDA Digital Health Advisory Committee meeting held on November 6, a nuanced picture emerged of generative AI’s role in mental health care, blending optimism with caution. The dialogue revealed a shared recognition of AI’s capacity to enhance accessibility and provide continuous support, particularly for those underserved by traditional systems. Yet, it also laid bare the profound risks, from inaccurate outputs to the inability to handle critical emotional nuances, underscoring that technology alone cannot shoulder the burden of psychiatric care. The diverse perspectives of clinicians added depth to the conversation, reflecting a spectrum of hope and concern that mirrors broader societal debates about AI’s place in healthcare.

Charting Next Steps for Safe Innovation

Moving forward, actionable strategies must guide the integration of AI into mental health care, starting with robust collaboration between developers and regulators to prioritize user safety. Establishing clear benchmarks for accuracy and mechanisms for human escalation can help mitigate risks, while public education on AI’s limitations ensures realistic expectations. For the FDA, accelerating the development of adaptive regulatory frameworks tailored to these unique tools will be essential in maintaining trust and efficacy. Encouraging further research into AI’s long-term impact on mental health outcomes can also inform future policies, ensuring that innovation serves as a bridge to better care rather than a barrier. This pivotal moment offers a chance to shape a future where technology and human expertise work hand in hand to support mental well-being.