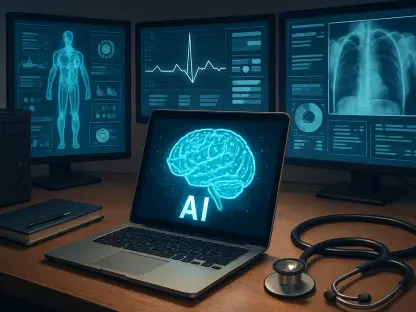

An unprecedented digital migration is underway in personal health management: generative artificial intelligence tools like ChatGPT now field more than 40 million healthcare-related questions every day. This volume accounts for more than 5% of the platform’s global traffic and reveals a profound shift in how individuals seek medical information. The queries span a wide spectrum of needs. They range from preliminary symptom checks and the interpretation of complex medical terminology to navigating the labyrinthine world of health insurance, a category that alone generates up to two million questions weekly. This reliance on AI for health guidance points to a growing ecosystem in which algorithms are becoming the first point of contact for millions of people. That shift raises critical questions about accuracy, safety, and the future of the patient-provider relationship in an increasingly automated world.

An Unregulated Digital First Responder

A deeper analysis of this trend reveals that the turn toward AI is often a matter of necessity rather than choice, driven by significant gaps in access to traditional healthcare. Approximately 70% of these health-related interactions occur outside typical clinic hours, when professional medical advice is largely unavailable. In these moments, AI serves as an always-on resource for people facing immediate health concerns. It becomes a de facto first responder, offering information and context when doctors’ offices are closed and a trip to the emergency room seems excessive. This round-the-clock availability has made generative AI an indispensable tool for people who want to understand their symptoms, prepare for upcoming appointments, or simply find reassurance in the middle of the night. For a significant portion of the population, it is fundamentally altering the first stage of the patient journey.

The pattern of AI adoption also paints a clear picture of its role in addressing systemic healthcare disparities across the United States. Usage rates are disproportionately high in sparsely populated states such as Wyoming and in regions identified as “hospital deserts,” where residents have limited or no local access to medical facilities. For these underserved communities, AI is not merely a convenience but a critical bridge to information that would otherwise be out of reach. An algorithm cannot replace a shuttered clinic or a retiring physician, but it does serve a vital near-term function. It helps people interpret health information, formulate questions for the rare medical appointments they can secure, and navigate a healthcare system that is often confusing and difficult to access. In doing so, it partially fills a void left by long-standing gaps in the nation’s healthcare infrastructure.

The Widening Chasm Between Patient Trust and Clinical Reality

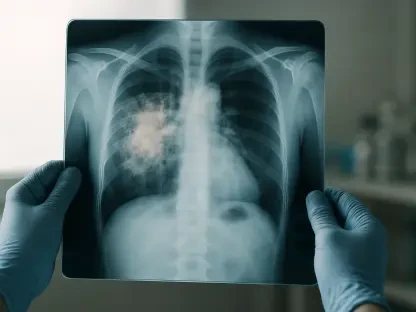

This widespread consumer adoption has, however, created a significant and potentially dangerous disconnect with the established clinical perspective. A majority of U.S. adults now report using AI for medical questions, and this behavior carries real risk. One concerning finding is that nearly one-third of Americans would delay or entirely avoid seeing a doctor if an AI tool suggested their symptoms were low-risk. This points to a perilous level of trust in unvalidated technology, in which an algorithm’s assessment could lead to missed diagnoses and worse health outcomes. The user experience reinforces this overconfidence: roughly half of the people who used ChatGPT to check symptoms felt the tool had effectively “led to a diagnosis.” That perception reflects a fundamental misunderstanding of AI’s capabilities, one that treats probabilistic text generation as a substitute for professional medical expertise.

The rapid integration of artificial intelligence is not limited to patients. Healthcare providers are also embracing the technology at an accelerating pace. Within the last year, the share of U.S. physicians using AI for work-related tasks rose sharply, from 38% to roughly two-thirds. For these professionals, AI serves less as a diagnostic tool than as an assistant that eases the crushing administrative burden behind widespread burnout. Physicians use it to streamline documentation, summarize patient histories, and manage communications, which frees up time for more personalized and attentive patient care. This dual adoption paints a complex picture of AI’s role. For clinicians, it is a resource that enhances care delivery. For patients who misuse it for self-diagnosis, it poses a direct threat to their safety and well-being.

Navigating an Uncharted Digital Frontier

The convergence of these trends has created a healthcare landscape defined by both unprecedented opportunity and significant peril. The rapid, unregulated adoption of AI by patients and providers alike has established a new paradigm. Digital tools fill critical gaps in access while introducing risks of misinformation and diagnostic overconfidence. The challenge for the healthcare community is to harness the efficiency and accessibility of AI without compromising the foundational principles of patient safety and evidence-based medicine. Meeting that challenge requires a multi-faceted response. It means developing clinical validation standards for health-related AI and educating the public about the technology’s limitations. It also means integrating verified tools into clinical workflows in ways that augment, rather than replace, the indispensable judgment of human healthcare professionals.