An algorithm, operating silently within a major health insurance system, has just determined that a patient’s post-surgery rehabilitation is no longer medically necessary, despite the treating physician’s strong objections. This scenario is no longer hypothetical; it is an increasingly common reality as artificial intelligence is integrated into healthcare to manage costs within value-based care and Medicare Advantage plans. These sophisticated tools are introduced with the promise of operational efficiency and data-driven precision, yet they often function as opaque “black boxes” that prioritize financial targets over the complex, individual needs of patients. This push for automation has ignited a critical debate across the healthcare industry, forcing providers, payers, and patients to confront a fundamental question: as technology takes a greater role in life-or-death decisions, are we engineering a system that truly puts the patient first, or are we inadvertently creating one that values profit above all else?

The Fundamental Conflict Between Averages and Individuals

The primary driver behind the adoption of AI in healthcare coverage is the compelling financial incentive to reduce spending. In an effort to control costs, insurers and accountable care organizations deploy predictive algorithms to forecast what an “average” patient should require for a given condition, calculating an optimal number of rehabilitation days or a standard quantity of home therapy visits. This data-centric model is frequently positioned as a move toward personalized medicine, but in practice, it often forces unique and complex human health issues into a rigid, one-size-fits-all framework. The algorithms are programmed to optimize for a statistical mean, a concept that stands in stark contrast to the reality of clinical practice, where illnesses, recovery trajectories, and unforeseen complications are deeply personal and rarely conform to a neat bell curve. This creates a dangerous and unavoidable clash where the system’s mandate for cost containment directly undermines a patient’s legitimate and often urgent medical needs.
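
The gap between an average and an individual can be made concrete with a small sketch. The figures below are invented for illustration and do not come from any real insurer or dataset; the rule shown (approve the mean number of days for everyone) is a deliberately simplified assumption, not a description of how any particular product works.

```python
from statistics import mean

# Hypothetical rehabilitation days actually needed by ten patients
# with the same diagnosis (illustrative numbers, not real data).
days_needed = [10, 12, 12, 13, 14, 14, 15, 16, 21, 33]

# Assumed rule: approve the same mean-based allotment for every patient.
approved_days = round(mean(days_needed))

# Days of unmet need for each patient whose recovery runs longer than the mean.
shortfalls = [d - approved_days for d in days_needed if d > approved_days]

print(f"Approved for everyone: {approved_days} days")
print(f"Patients left short: {len(shortfalls)}, worst shortfall: {max(shortfalls)} days")
```

In this toy data the allotment fits most patients, yet the two with the most complicated recoveries, who are arguably the ones at greatest risk, are exactly the ones the rule cuts off early.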

For clinicians on the front lines, these AI systems can feel less like a sophisticated tool and more like a blunt instrument that automates the denial of necessary care. A poignant example involved a cancer survivor with complications whose post-acute care was slated for premature termination by an algorithm designed to minimize costs. Only through the determined intervention of human care coordinators, who advocated for extended services based on their direct knowledge of the patient’s fragile condition, was a costly and dangerous hospital readmission averted. This case highlights a critical flaw in the system: without a human advocate possessing the authority to override the algorithm, countless patients are left vulnerable. They face denials for an extra week of home nursing or a specific piece of medical equipment simply because their situation deviates from the predefined, cost-effective model, exposing them to significant health risks.

The Pervasive Danger of the Black Box

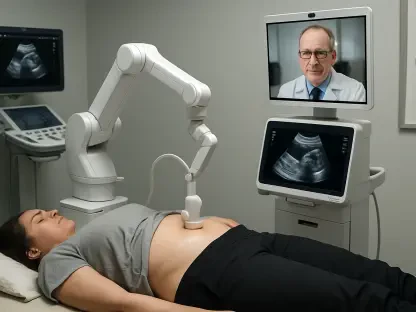

Among the most damaging aspects of AI-driven coverage decisions is the profound and systemic lack of transparency. When an algorithm rejects a claim for care, patients and their families are typically left entirely in the dark, receiving generic denial letters filled with boilerplate phrases such as “not medically necessary.” These communications offer no specific details about the data used, the logic applied, or how the conclusion was reached, making it nearly impossible for a patient to understand, question, or effectively appeal the decision. This “black box” nature of the process is deeply disempowering, stripping individuals of their agency at a time when they are most vulnerable. The secrecy surrounding the algorithm’s internal workings means that even when a denial ignores well-documented medical conditions that make a planned discharge unsafe, there is no clear path for recourse or accountability.

This pervasive opacity extends far beyond the individual patient, creating destabilizing ripple effects throughout the entire care ecosystem. Hospitals and skilled nursing facilities are unable to effectively plan patient transitions when coverage can be abruptly terminated based on hidden algorithmic criteria. Sudden cutoffs create friction between providers and payers: discharge planners are blindsided, and care teams find themselves in stark disagreement with automated decisions to end coverage for patients they know are not clinically ready. The ultimate consequences of this secrecy and lack of coordination are hurried and unsafe discharges, fragmented care, and a higher risk of complications and hospital readmissions. These are the very outcomes that value-based care and predictive analytics were ostensibly designed to prevent, which reveals a deep and dangerous paradox at the heart of the system.

Establishing a Framework for Ethical Implementation

To realign the trajectory of AI in healthcare toward a patient-first model, organizations must commit to instilling fairness through rigorous and proactive oversight. While managing costs remains a valid objective, it cannot supersede the primary mission of providing necessary and equitable care. This requires healthcare systems and algorithm developers to meticulously audit these powerful tools for bias before they are ever deployed at scale. Such audits must involve a systematic examination of outcomes across diverse demographic groups—including race, gender, and socioeconomic status as indicated by zip code—to identify and rectify any disparities or errors the algorithms may perpetuate or amplify. The core of this solution is a fundamental shift in philosophy from reactive damage control, which addresses harms after they occur, to a system of proactive governance that builds ethics and fairness into the technology from its inception.
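
One way such an audit might begin is by comparing denial rates across groups and flagging large gaps for human review. The sketch below is a minimal illustration under stated assumptions: the function names `denial_rates` and `flag_disparities`, the 10-percentage-point threshold, and the sample records are all hypothetical, and a real audit would need far larger samples, statistical testing, and clinical context.

```python
from collections import defaultdict

def denial_rates(decisions):
    """Return the share of denied requests for each group.

    `decisions` is an iterable of (group, denied) pairs, where `denied`
    is True when the algorithm rejected the request.
    """
    totals = defaultdict(int)
    denials = defaultdict(int)
    for group, denied in decisions:
        totals[group] += 1
        denials[group] += int(denied)
    return {group: denials[group] / totals[group] for group in totals}

def flag_disparities(rates, max_gap=0.10):
    """List groups whose denial rate exceeds the lowest rate by more than max_gap.

    The 0.10 default is an assumed threshold chosen for illustration only.
    """
    baseline = min(rates.values())
    return sorted(g for g, r in rates.items() if r - baseline > max_gap)

# Hypothetical audit records grouped by zip code (not real data).
decisions = [
    ("zip_A", True), ("zip_A", False), ("zip_A", False), ("zip_A", False),
    ("zip_B", True), ("zip_B", True), ("zip_B", False), ("zip_B", False),
]

rates = denial_rates(decisions)
flagged = flag_disparities(rates)
print(rates)
print("Needs review:", flagged)
```

A flagged group is a prompt for investigation rather than proof of bias, since differences in denial rates can also reflect differences in the underlying requests.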

Transparency must be treated not as an optional feature but as an absolute necessity for safe and ethical care. Insurers do not need to publish their proprietary formulas, but they have a responsibility to disclose the specific criteria, factors, and clinical evidence an algorithm uses to approve or deny services, so that patients and providers can understand the basis of a decision. An algorithm’s predictions, such as the expected duration of covered post-acute care, should likewise be shared openly with hospitals, skilled nursing facilities, and care teams. This open communication allows for collaborative and proactive discharge planning, enabling providers to flag concerns early if a prediction starkly contrasts with a patient’s clinical reality.

Ultimately, the reaffirmation of human judgment is most critical. AI algorithms are merely tools, designed by humans and programmed to reflect specific, often financial, priorities, and they inherently lack a complete, holistic understanding of a patient’s unique situation. The final decision to provide or deny care must therefore rest with humans, who possess the situational knowledge, clinical expertise, and nuanced understanding that technology cannot replicate.