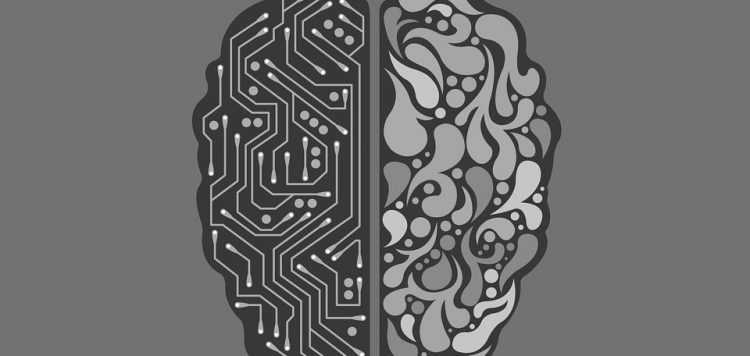

The human brain has been called ‘nature’s most sophisticated computer’. Yet it is still susceptible to error, damage, and disease. As technology keeps advancing, several questions inevitably arise: What if we could build a synthetic brain with all the intelligent features of the human one, but without its imperfections? And if we did, how would this affect the safety of future generations?

Building the perfect brain

There are billions of neurons and trillions of neuronal connections in the human brain. This sheer complexity makes neuroscientists’ effort to replicate the human brain synthetically extremely challenging. However, the progress made so far is encouraging: some claim the robot brain can already perform “general intelligent action” and even experience a certain degree of consciousness.

Still, scientists agree that AI is far from delivering human-like behavior, and the biggest shortcoming seems to be self-awareness. Research has yet to work out how to equip the robot brain with self-recognition, the key feature that would allow neural networks to make internal choices on their own, independently of their initial algorithms.

‘One of the pitfalls for machines becoming self-aware is that consciousness in humans is not well-defined enough, which would make it difficult if not impossible for programmers to replicate such a state in algorithms for AI,’ researchers reported in a study published in October 2017 in the journal Science.

Another unanswered question standing in the way of humanizing AI is this: how do we program the robot brain to do what we mean, not what we say? This is known as the AI alignment problem. For example, an AI told to eradicate a disease should understand that it is meant to find a cure for that disease, not to kill everyone suffering from it. This problem of ‘misspecified objective functions’ makes it difficult for programmers to state reliably what they really want.

Should we trust the robot brain?

Let’s say we were to overcome these barriers and build complex machines with human capabilities and minds of their own. Would this development be a safe bet? What will robots do with all the power we give them?

“They’re going to be smarter than us and if they’re smarter than us then they’ll realize they need us…”, says Steve Wozniak, co-founder of Apple. In a more recent interview, though, he said he is “skeptical that computers will be able to compete with human intuition”.

The renowned physicist Stephen Hawking warned: “Success in creating effective AI could be the biggest event in the history of our civilization. Or the worst. We just don’t know. So we cannot know if we will be infinitely helped by AI, or ignored by it and side-lined, or conceivably destroyed by it.” Hawking argued that, to avoid the worst outcome, creators of AI need to “employ best practice and effective management.”

AI safety

To address possible threats, the Future of Life Institute has recently launched its second AI Safety Research program. “What we really need to do is make sure that life continues into the future. […] It’s best to try to prevent a negative circumstance from occurring than to wait for it to occur and then be reactive,” said Elon Musk on keeping AI safe and beneficial.

While this is clearly a difficult challenge, some voices believe AI safety research is unnecessary for now and need not be addressed yet. Andrew Ng, a leading figure in AI, compared it to “worrying about overpopulation on Mars”, arguing that “it’s way too early for AI safety to be a concern.”

The idea of AI dates back to antiquity, with myths and rumors of intelligent machines built by master craftsmen. Only thousands of years later, in 1956, was the field of AI research founded. Ever since, people have been making predictions about what a future with synthetic brains would mean for humankind.

The truth is that all we have so far are speculations and what-ifs. Although technology is evolving at a fast pace, human-like robots are probably still decades away. And were they to develop superintelligence, we have no way of predicting how they would see and relate to humans.